When preparing for the CKS exam, you will practice many tasks that involve changing /etc/kubernetes/manifests/kube-apiserver.yaml. This file is monitored by the Kubelet service, which runs the kube-apiserver process in a container. If the Kubelet detects a change in that file, it restarts the container with the updated configuration.

In successful scenarios, kube-apiserver's container stops for a few minutes and then returns. However, if there is an error, it might not come back.

We'll show you steps you can follow to get back on track, especially if you are preparing for any of the Linux Foundation's Kubernetes certifications.

The testing environment

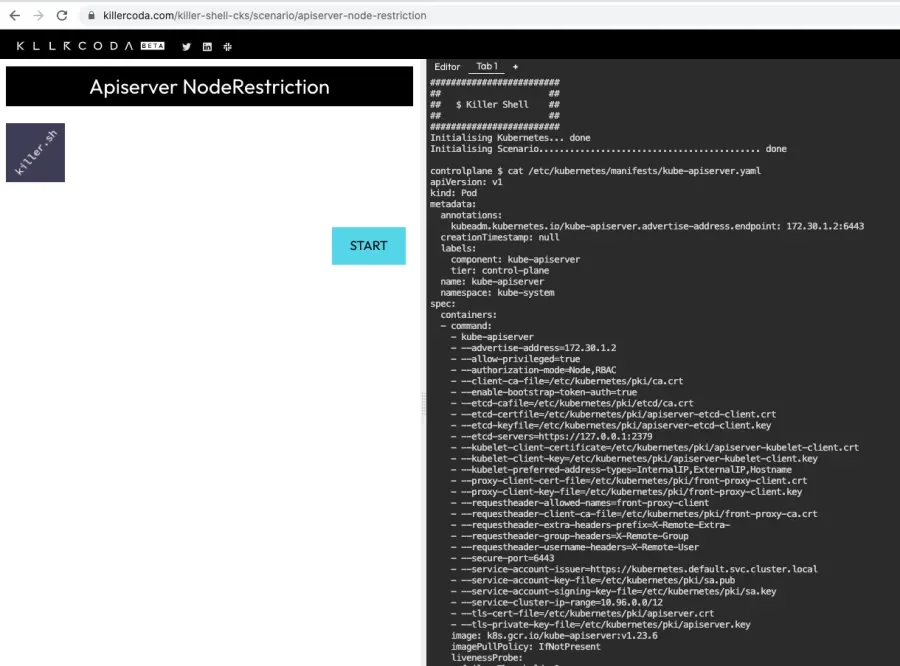

Let's take one of Kim Wuestkamp's killercoda challenges where we have an ephemeral cluster that we can tinker with. Here is an active environment with the printed the contents of /etc/kubernetes/manifests/kube-apiserver.yaml:

We can see that there is a command called kube-apiserver, which receives a long list of options, each on its own line. We will start by changing something that we know will work, then forge ahead from there.

Making a change

The change we are going to make forbids the creation of privileged containers. Per the official documentation, this means changing the option --allow-privileged=true into --allow-privileged=false.

Before making the change, it is recommended to make a copy of the existing configuration. You can just copy it into the home directory with the following command:

cp /etc/kubernetes/manifests/kube-apiserver.yaml .
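
If you expect to edit the file several times, a timestamped copy avoids overwriting an earlier backup. The following is a minimal sketch, not part of the challenge: the function name backup_manifest and the backup naming scheme are assumptions made for illustration. It keeps the copy outside /etc/kubernetes/manifests, since the Kubelet treats the files in that directory as manifests to run.

```shell
# Hypothetical helper: copy a manifest to a timestamped backup file.
# Usage: backup_manifest <manifest> [backup-dir]   (backup-dir defaults to $HOME)
backup_manifest() {
  bm_src=$1
  bm_dir=${2:-$HOME}
  bm_dest="$bm_dir/$(basename "$bm_src").$(date +%Y%m%d-%H%M%S).bak"
  mkdir -p "$bm_dir" || return 1
  # -p preserves mode and timestamps so the copy can be restored as-is.
  cp -p "$bm_src" "$bm_dest" || return 1
  # Print the backup path so it can be used for a later restore.
  echo "$bm_dest"
}

# Example (not run here):
#   backup_manifest /etc/kubernetes/manifests/kube-apiserver.yaml
```

Restoring is then a plain cp of the printed path back over the manifest.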

Now let's make the actual change in the file with an editor like Vim. Open the file, make the change described above, save, and quit.
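
If you prefer a non-interactive edit, the same change can be scripted. This is only a sketch under assumptions: set_manifest_flag is a hypothetical helper name, and it assumes the flag already appears in the manifest as a single --flag=value entry on its own line. The temporary file is created by mktemp outside the manifests directory, and the manifest is overwritten in place so its owner and mode stay unchanged.

```shell
# Hypothetical helper: set --<flag>=<value> on an existing flag line of a manifest.
# Usage: set_manifest_flag <manifest> <flag> <value>
set_manifest_flag() {
  sf_file=$1 sf_flag=$2 sf_value=$3
  # Fail instead of silently doing nothing when the flag is absent.
  grep -q -e "--$sf_flag=" "$sf_file" || { echo "flag --$sf_flag not found" >&2; return 1; }
  sf_tmp=$(mktemp) || return 1
  # Replace everything after "--flag=" up to the end of the line.
  sed "s|\(--$sf_flag=\).*|\1$sf_value|" "$sf_file" > "$sf_tmp" &&
    cat "$sf_tmp" > "$sf_file"   # overwrite in place, keeping owner and mode
  sf_status=$?
  rm -f "$sf_tmp"
  return "$sf_status"
}

# Example (not run here):
#   set_manifest_flag /etc/kubernetes/manifests/kube-apiserver.yaml allow-privileged false
```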

TIP: In a production environment, Kubernetes configuration might be managed as code. However, the steps explained below to monitor and analyze the result of a change should still be helpful.

Monitor the change

The Kubelet will detect the file change and restart the kube-apiserver container with the new configuration. We can track this by observing the running containers via the following command:

watch crictl ps

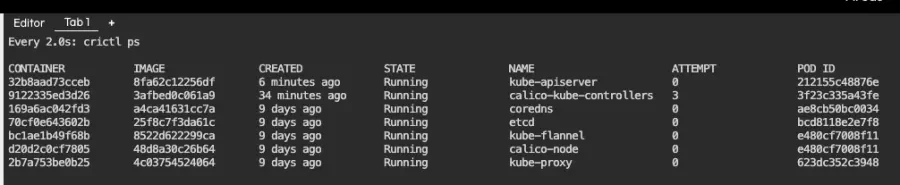

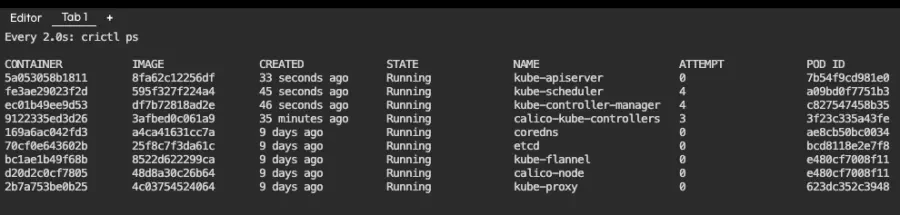

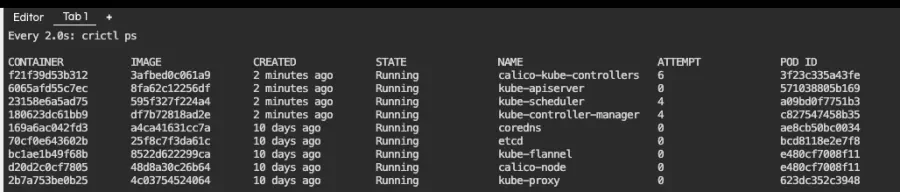

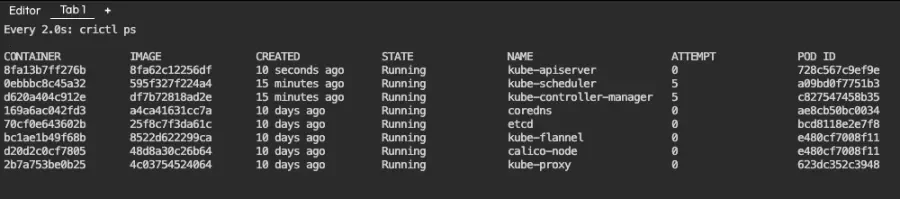

Here is the output, which refreshes every two seconds:

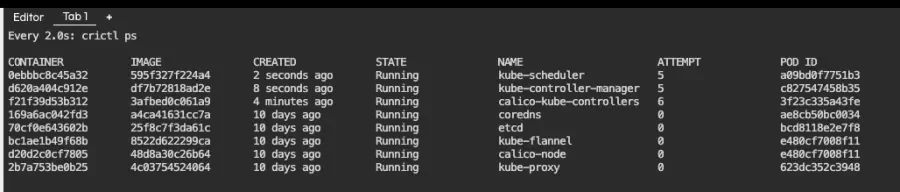

This listing shows our running containers. One of them is kube-apiserver, which has been running for 6 minutes. If everything goes well, it should vanish for a few seconds and reappear, like in the screenshot below:

TIP: In the above command, we use crictl instead of docker to list running containers. crictl is a command-line interface for CRI-compatible container runtimes. Kubernetes clusters commonly use a CRI-compatible runtime such as containerd rather than Docker. If your cluster does use Docker, the command is identical except that docker replaces crictl.
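
Instead of watching the listing by eye, you can poll until the container is back. The sketch below assumes crictl is on the PATH and relies on the --name filter and the -q (IDs only) option of crictl ps. The function name wait_for_container, the 120-second default, and the 2-second interval are illustrative choices.

```shell
# Hypothetical helper: wait until a running container matching <name> shows up.
# Usage: wait_for_container <name> [timeout-seconds]
# Prints the container ID and returns 0 on success, returns 1 on timeout.
wait_for_container() {
  wc_name=$1
  wc_timeout=${2:-120}
  wc_elapsed=0
  while [ "$wc_elapsed" -lt "$wc_timeout" ]; do
    # crictl ps lists running containers only; -q prints just the IDs.
    wc_id=$(crictl ps --name "$wc_name" -q 2>/dev/null | head -n 1)
    if [ -n "$wc_id" ]; then
      echo "$wc_id"
      return 0
    fi
    sleep 2
    wc_elapsed=$((wc_elapsed + 2))
  done
  return 1
}

# Example (not run here):
#   wait_for_container kube-apiserver 180 || echo "kube-apiserver did not come back"
```

Note that a container caught in a crash loop may be reported as running for a moment, so a successful return is a hint rather than proof that the new configuration works.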

Verify the change

Now that the kube-apiserver container is running, we can verify that it is using our change by searching for running processes:

ps aux | grep kube-apiserver | grep privileged

We can see that there is a kube-apiserver process that is using the option --allow-privileged=false, hence the change did take effect.
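
To check a single flag without reading the whole command line, you can split the arguments and print just its value. This is a minimal sketch: flag_value is a hypothetical helper, and it assumes arguments are separated by spaces and written as --flag=value.

```shell
# Hypothetical helper: read command lines on stdin and print the value of --<flag>.
# Usage: <command line on stdin> | flag_value <flag>
flag_value() {
  # One argument per line, then keep only what follows "--<flag>=".
  tr ' ' '\n' | sed -n "s/^--$1=//p" | head -n 1
}

# Example (not run here; assumes the procps version of ps found on most Linux systems):
#   ps -o args= -C kube-apiserver | flag_value allow-privileged
```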

Dealing with failures

There are two kinds of errors:

- Bad syntax errors. These are caused by introducing an error in /etc/kubernetes/manifests/kube-apiserver.yaml that makes its YAML invalid, so the Kubelet stops the kube-apiserver container but does not start it again.
- Incorrect configuration. This causes a container startup error, so the Kubelet keeps trying to start the kube-apiserver container and each attempt fails.

In both cases, the troubleshooting steps are the same, but understanding these two scenarios will help you know where to look. Let's look at each in turn.

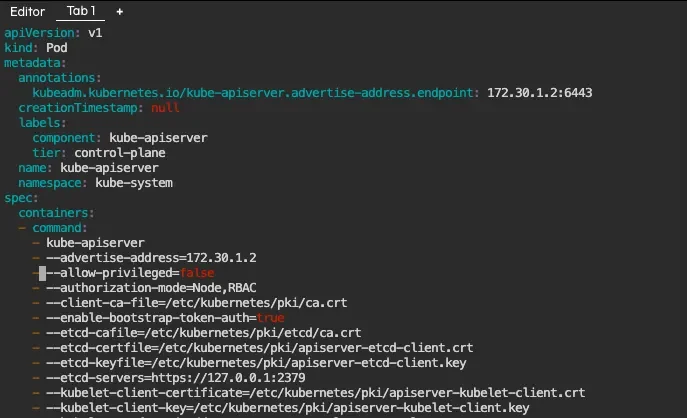

Bad syntax

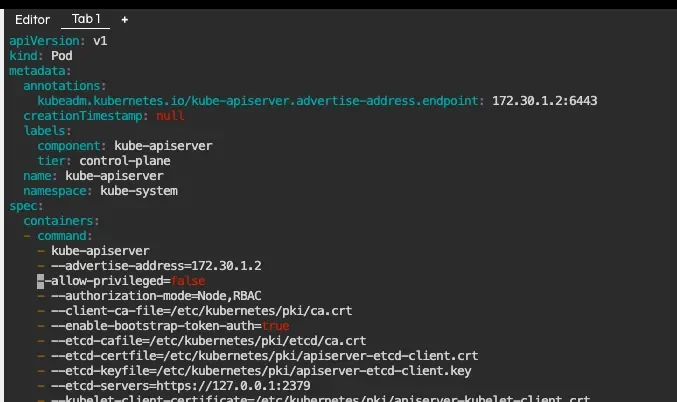

We will introduce a typo in /etc/kubernetes/manifests/kube-apiserver.yaml and then see what happens. The following screenshot shows the now-invalid array of options passed to the kube-apiserver command:

Now let's save the file and monitor containers as we did before. We list containers with the following command:

watch crictl ps

After a couple of minutes, kube-apiserver is gone and does not reappear:

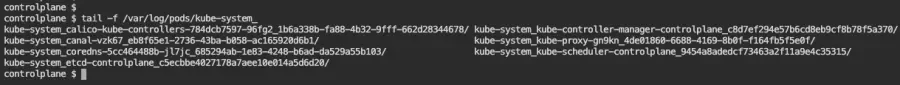

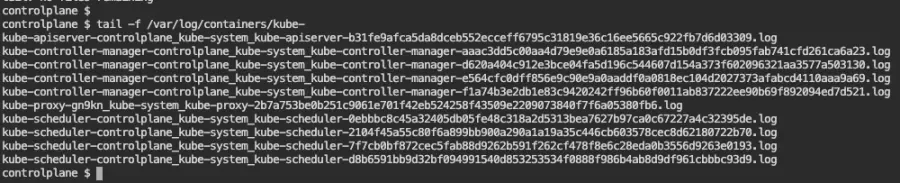

Time to look at the logs. These are under /var/log/pods or /var/log/containers; use whichever you prefer. In this case there is nothing to see, since the container is not running and has been deleted. The kube-apiserver logs are missing from /var/log/pods:

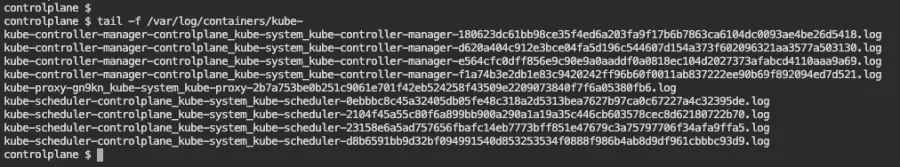

Now let's look at /var/log/containers:

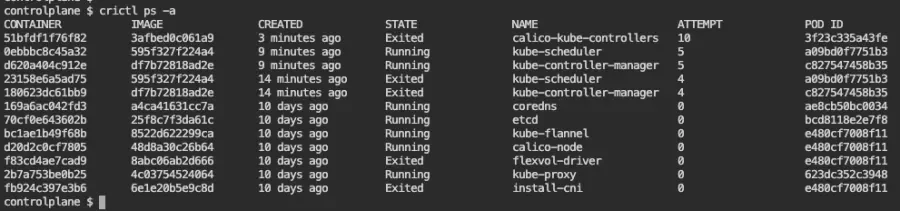

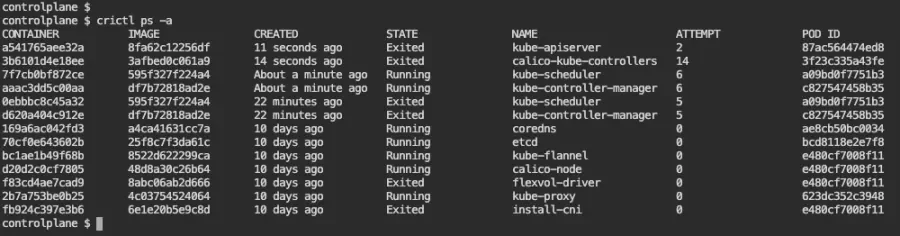

Next, let's list all containers in the system using crictl, including the ones that are not running:

crictl ps -a

If you get to this point, look for errors in the journalctl output, filtering by apiserver since there is usually a lot of output. You can do this with:

journalctl | grep apiserver

And here is the result, right at the bottom of the output:

May 13 13:25:49 controlplane kubelet[25181]: E0513 13:25:49.437945 25181 file.go:187] "Could not process manifest file" err="/etc/kubernetes/manifests/kube-apiserver.yaml: couldn't parse as pod(yaml: line 18: could not find expected ':'), please check config file" path="/etc/kubernetes/manifests/kube-apiserver.yaml"

We can see above that the manifest file /etc/kubernetes/manifests/kube-apiserver.yaml could not be processed. We even got the line number (18), which matches the line where we broke the array of options. Let's fix it and verify that the kube-apiserver comes back to life:

vim /etc/kubernetes/manifests/kube-apiserver.yaml

Then we save and monitor containers again. As expected, kube-apiserver is now running:

When we introduce bad syntax, there are no new containers and (unless you look quickly) no logs under /var/log. The place to find the error is therefore journalctl.
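
Because grepping the journal for apiserver returns a lot of noise, it can help to match the Kubelet message itself. The sketch below keys on the text "Could not process manifest file" from the log line shown earlier and prints only its err="..." part. The helper name manifest_errors is hypothetical, and the pattern assumes the error text contains no double quotes.

```shell
# Hypothetical helper: read kubelet log lines on stdin and print the err="..."
# text of every "Could not process manifest file" entry.
manifest_errors() {
  grep 'Could not process manifest file' | sed -n 's/.*err="\([^"]*\)".*/\1/p'
}

# Example (not run here; assumes the Kubelet runs as the systemd unit "kubelet"):
#   journalctl -u kubelet --no-pager | manifest_errors | tail -n 1
```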

Let's look at the other type of error that we may find.

Incorrect configuration

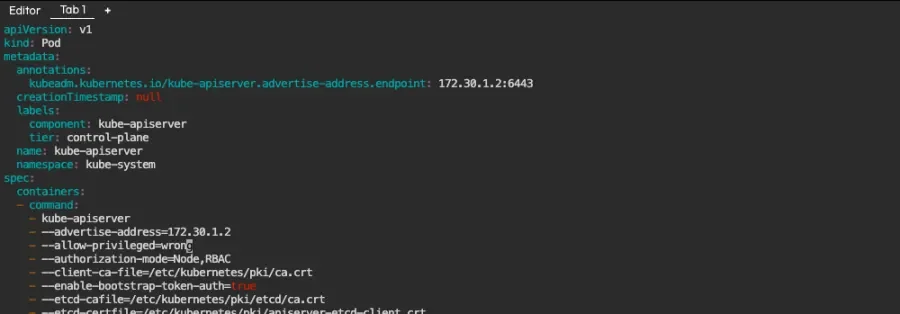

In this scenario, the manifest syntax is correct, but the configuration is not. This can happen when we set an option to a wrong value, such as a value of the wrong data type or an incorrect volume path. Let's see an example.

We will use the same option we have been using in this article, allow-privileged, and this time we will set it to the invalid value wrong. The resulting manifest is valid YAML, but when the Kubelet starts the container, it will fail and enter a loop in which it starts and crashes. Let's start by making the change:

vim /etc/kubernetes/manifests/kube-apiserver.yaml

We then save the file and monitor containers:

watch crictl ps

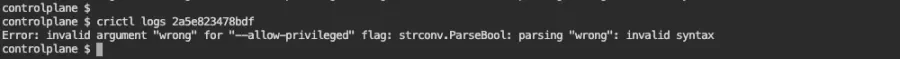

In the listing above, kube-apiserver appears with STATE Exited, and there have been two attempts to start it, the last one 11 seconds ago. We can dig deeper into the previous attempt by copying its container identifier from the CONTAINER column (2a5e823478bdf) and running crictl logs 2a5e823478bdf:

There is the error. Now that we know what to fix, let's look at the container logs under /var/log/containers to see if we can find this error there:

Nothing there. Now let's look at the pod logs under /var/log/pods:

Nothing there either. Finally, let's look for the error in journalctl:

journalctl | grep kube-apiserver

The above command returns a lot of error messages since there are other services trying to reach out to the kube-apiserver and failing. None of the errors is the one that we found in the container logs via crictl.
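
Since crictl logs was the only place where the error showed up in this scenario, it is worth having that lookup at hand. The following sketch combines crictl ps -a with crictl logs --tail. The function name last_container_logs and the 20-line tail are illustrative, and taking the first ID assumes that crictl lists the most recently created container first.

```shell
# Hypothetical helper: print the last log lines of the newest container whose
# name matches $1 (default kube-apiserver), whether it is running or exited.
last_container_logs() {
  lc_name=${1:-kube-apiserver}
  # -a includes exited containers, -q prints only IDs. Taking the first ID
  # assumes crictl lists the most recently created container first.
  lc_id=$(crictl ps -a --name "$lc_name" -q | head -n 1)
  if [ -z "$lc_id" ]; then
    echo "no container named $lc_name found" >&2
    return 1
  fi
  crictl logs --tail 20 "$lc_id" 2>&1
}

# Example (not run here):
#   last_container_logs kube-apiserver
```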

Summary

Here is a suggested list of steps that sums up all the above examples:

1. Make a copy of kube-apiserver.yaml before you make changes to it.
2. Make the change in kube-apiserver.yaml and save it.
3. Monitor containers with watch crictl ps or watch docker ps, depending on the container runtime that the cluster uses.
4. If the kube-apiserver does not reappear after a couple of minutes:
   - List all containers with crictl ps -a. If you see a kube-apiserver container, inspect its logs with crictl logs [id of the container]. If you identify the error, fix it in kube-apiserver.yaml and go back to step 3.
   - If you did not find the error, search for it in journalctl using journalctl | grep apiserver.
   - If you did not find the error in journalctl, look at the container and pod logs under /var/log.
5. There are cases where the Kubelet did stop the kube-apiserver container but did not start it again. You can force it to do so with systemctl restart kubelet.service. That should attempt to start kube-apiserver and log an error in journalctl if it failed.
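
The choice of where to look first can be sketched as a small check. It follows what we saw in the two scenarios: an exited container points to crictl logs, and no container at all points to journalctl. The helper name where_to_look and its messages are hypothetical, and the check is only a heuristic, since a container in a crash loop may be running at the moment you look.

```shell
# Hypothetical helper: print which log source to check first for a container
# name (default kube-apiserver), based on what crictl currently reports.
where_to_look() {
  wl_name=${1:-kube-apiserver}
  if [ -n "$(crictl ps --name "$wl_name" -q 2>/dev/null)" ]; then
    # A running container exists: confirm that it uses the new flags.
    echo "running: verify the flags with ps"
  elif [ -n "$(crictl ps -a --name "$wl_name" -q 2>/dev/null)" ]; then
    # Only exited containers exist: the container started and crashed.
    echo "exited: read crictl logs of the last container"
  else
    # No container at all: the Kubelet probably rejected the manifest.
    echo "missing: search journalctl for kubelet manifest errors"
  fi
}

# Example (not run here):
#   where_to_look kube-apiserver
```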

Conclusion

This guide should help you track down configuration errors in kube-apiserver. It takes patience and practice to become fluent with these commands and the kind of output they produce. You can start practicing now by solving the challenges that involve the apiserver at https://killercoda.com.