Following up on the first three parts of the Continuous Deployment, Infrastructure as Code, and Drupal series, where we used DigitalOcean as the cloud provider, in this article we will see how to set up a continuous deployment workflow that uses Google Cloud. Given the Cloud Native Computing Foundation’s promise of portability across cloud providers, the process should be very similar.

We have set up a demo repository containing the following resources, which we will cover in the next sections:

- A GitHub Actions workflow that builds a Docker image, pushes it to GitHub Packages, and then instructs Kubernetes to deploy it into the cluster.

- A set of Kubernetes objects that define the architecture of the web application.

Let’s begin with an overview of the cluster setup.

The Kubernetes cluster setup

We begin by creating a Kubernetes cluster in Google Cloud’s Kubernetes Engine. The free tier is enough for what we want to accomplish in this article, so there is no need to spend money if you just want to tinker with Kubernetes on Google Cloud. Follow the steps in Create Cluster and leave the default options as they are.

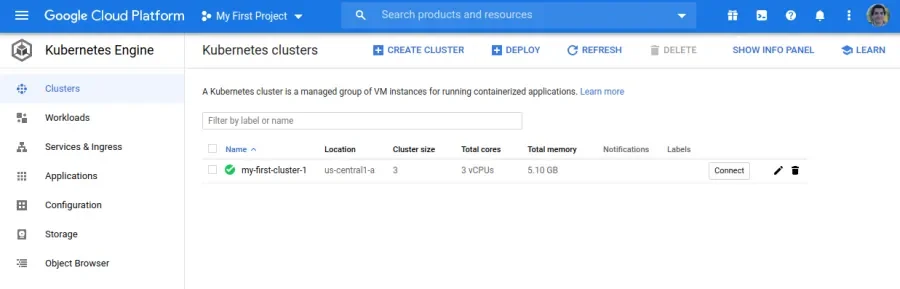

Here is a screenshot of the Kubernetes Engine dashboard after creating the cluster:

To interact with the cluster from the command line, we need to install the Google Cloud SDK. In particular, gcloud is the command we need to perform actions such as downloading cluster details or performing deployments. Here is a link to the gcloud installation instructions for all platforms.

With the SDK installed, we can download the cluster details to start issuing commands against it. Here is the command for doing so:

```shell
gcloud container clusters get-credentials my-first-cluster-1 --zone us-central1-a --project heroic-bliss-279608
```

The above command downloads the cluster details and sets the cluster as the default context for kubectl, the command line interface for managing Kubernetes clusters. Here is how we can list the available contexts:

```
juampy@carboncete:~:$ kubectl config get-contexts
CURRENT   NAME
          do-sfo2-drupster
*         gke_heroic-bliss-279608_us-central1-a_my-first-cluster-1
```

The star above indicates the active context, meaning that kubectl is set to use the Google Cloud cluster that we just created. As for do-sfo2-drupster, this is the DigitalOcean cluster that we used in the Continuous Deployment, Infrastructure as Code, and Drupal series.
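The context name follows a predictable pattern: gke_ followed by the project, zone, and cluster name joined by underscores. Knowing this is handy when switching back to this cluster with kubectl config use-context after working with another one. Here is a minimal shell sketch; gke_context_name is a hypothetical helper, and the pattern shown applies to zonal clusters like the one above.

```shell
#!/bin/sh
# Hypothetical helper: build the kubectl context name that
# "gcloud container clusters get-credentials" creates for a zonal GKE cluster.
# Pattern assumed: gke_<project>_<zone>_<cluster>, as in the listing above.
gke_context_name() {
  project="$1"
  zone="$2"
  cluster="$3"
  printf 'gke_%s_%s_%s\n' "$project" "$zone" "$cluster"
}

# Prints the context name of the cluster created in this article.
gke_context_name heroic-bliss-279608 us-central1-a my-first-cluster-1
```

For example, kubectl config use-context "$(gke_context_name heroic-bliss-279608 us-central1-a my-first-cluster-1)" makes the Google Cloud cluster the active context again.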

That’s it for the cluster setup. In the next section we will set up GitHub Actions to perform deployments using a service account, but it is still useful to have access to the cluster from our local environment in order to analyze its state and manage secrets.

Continuous Deployment via GitHub Actions

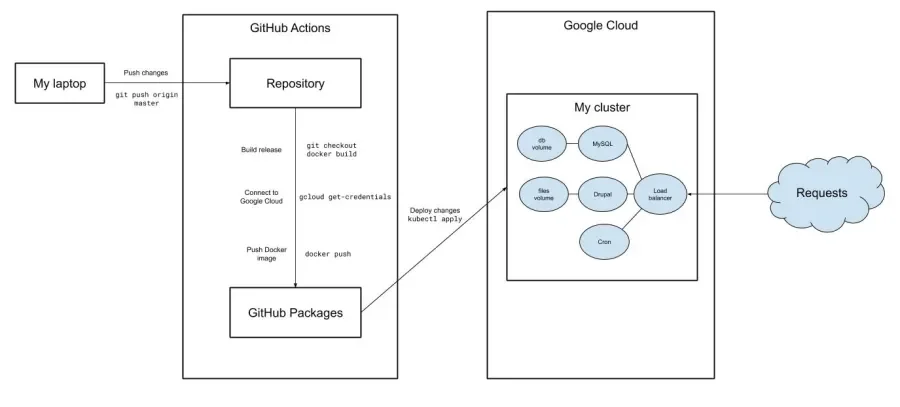

In this section we will analyze a GitHub Actions workflow. When code is pushed to master branch, it will perform the following tasks:

- Builds a Docker image containing the code and the operative system. Then pushes it to GitHub Packages.

- Installs gcloud: Google Cloud’s command line interface.

- Downloads credentials and details of the Kubernetes cluster at Google Cloud.

- Performs a deployment and updates the database.

Here is a diagram that illustrates the above:

Here are the contents of the GitHub Actions workflow:

https://github.com/juampynr/drupal8-gcloud/blob/master/.github/workflows/ci.yml

```yaml
on:
  push:
    branches:
      - master
name: Build and deploy
jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v1
        with:
          fetch-depth: 1
      # Build the application image, push it to GitHub Packages, and pull it
      # back to verify that the push succeeded.
      - name: Build, push, and verify image
        run: |
          echo ${{ secrets.PACKAGES_TOKEN }} | docker login docker.pkg.github.com -u juampynr --password-stdin
          docker build --tag docker.pkg.github.com/juampynr/drupal8-gcloud/drupal8-gcloud:${GITHUB_SHA} .
          docker push docker.pkg.github.com/juampynr/drupal8-gcloud/drupal8-gcloud:${GITHUB_SHA}
          docker pull docker.pkg.github.com/juampynr/drupal8-gcloud/drupal8-gcloud:${GITHUB_SHA}
      # Install gcloud and authenticate with the service account key.
      - name: Install gcloud
        uses: GoogleCloudPlatform/github-actions/setup-gcloud@master
        with:
          version: '295.0.0'
          project_id: ${{ secrets.GCP_PROJECT_ID }}
          service_account_key: ${{ secrets.GCP_SA_KEY }}
          export_default_credentials: true
      # Download the cluster credentials so kubectl can talk to the cluster.
      - name: Check gcloud credentials
        run: |
          gcloud container clusters get-credentials ${{ secrets.GCP_CLUSTER_ID }} --zone us-central1-a --project ${{ secrets.GCP_PROJECT_ID }}
          kubectl config get-contexts
      # Fill in the manifest placeholders, apply them, and wait for the rollout.
      - name: Deploy to Google Cloud
        run: |
          sed -i 's|<IMAGE>|docker.pkg.github.com/juampynr/drupal8-gcloud/drupal8-gcloud:'${GITHUB_SHA}'|' $GITHUB_WORKSPACE/kubernetes/drupal-deployment.yaml
          sed -i 's|<DB_PASSWORD>|${{ secrets.DB_PASSWORD }}|' $GITHUB_WORKSPACE/kubernetes/drupal-deployment.yaml
          sed -i 's|<DB_PASSWORD>|${{ secrets.DB_PASSWORD }}|' $GITHUB_WORKSPACE/kubernetes/mysql-deployment.yaml
          sed -i 's|<DB_ROOT_PASSWORD>|${{ secrets.DB_PASSWORD }}|' $GITHUB_WORKSPACE/kubernetes/mysql-deployment.yaml
          kubectl apply -k kubernetes
          kubectl rollout status deployment/drupal
      # Run the Robo tasks inside the first frontend (Drupal) pod.
      - name: Update database
        run: |
          POD_NAME=$(kubectl get pods -l tier=frontend -o=jsonpath='{.items[0].metadata.name}')
          kubectl exec $POD_NAME -c drupal -- vendor/bin/robo files:configure
          kubectl exec $POD_NAME -c drupal -- vendor/bin/robo database:update
```

If you have read Continuous Deployment, Infrastructure as Code, and Drupal: Part 2, then the above will look familiar. The steps that build and push the Docker image, perform the deployment, and update the database are the same as in that article. What changes is how we authenticate against Google Cloud. Let’s see that in further detail.
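The Deploy to Google Cloud step fills in the IMAGE, DB_PASSWORD, and DB_ROOT_PASSWORD placeholders with sed before running kubectl apply. One caveat: sed interprets &, \ and the | delimiter inside the replacement text, so a password containing any of them would be written incorrectly. The sketch below reproduces the substitution on a throwaway file and escapes the value first. escape_sed_replacement is a hypothetical helper, not part of the demo repository, and sed -i without a suffix assumes GNU sed, as found on the ubuntu-latest runner.

```shell
#!/bin/sh
# Hypothetical helper: escape the characters that sed treats specially in the
# replacement part of s|pattern|replacement| ("\", the "|" delimiter and "&").
escape_sed_replacement() {
  printf '%s' "$1" | sed -e 's/[\\|&]/\\&/g'
}

# A throwaway file standing in for kubernetes/drupal-deployment.yaml.
manifest=$(mktemp)
trap 'rm -f "$manifest"' EXIT
cat > "$manifest" <<'EOF'
image: <IMAGE>
value: <DB_PASSWORD>
EOF

# Example values; the password deliberately contains "|" and "&".
image="docker.pkg.github.com/juampynr/drupal8-gcloud/drupal8-gcloud:abc123"
password='p|ss&word'

# Same substitutions as the workflow, but with escaped replacement text.
sed -i "s|<IMAGE>|$(escape_sed_replacement "$image")|" "$manifest"
sed -i "s|<DB_PASSWORD>|$(escape_sed_replacement "$password")|" "$manifest"

cat "$manifest"
```

Without the escaping, the & in the example password would be replaced by the matched placeholder text and the | would terminate the replacement early, which sed reports as an error.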

Building and pushing the Docker image

Once GitHub Actions has checked out the code, it performs the following step in which it builds a Docker image, then pushes it to the Docker registry within the GitHub project (aka GitHub Packages), and finally pulls the image. Here is the step:

https://github.com/juampynr/drupal8-gcloud/blob/master/.github/workflows/ci.yml

- name: Build, push, and verify image

run: |

echo ${{ secrets.PACKAGES_TOKEN }} | docker login docker.pkg.github.com -u juampynr --password-stdin

docker build --tag docker.pkg.github.com/juampynr/drupal8-gcloud/drupal8-gcloud:${GITHUB_SHA} .

docker push docker.pkg.github.com/juampynr/drupal8-gcloud/drupal8-gcloud:${GITHUB_SHA}

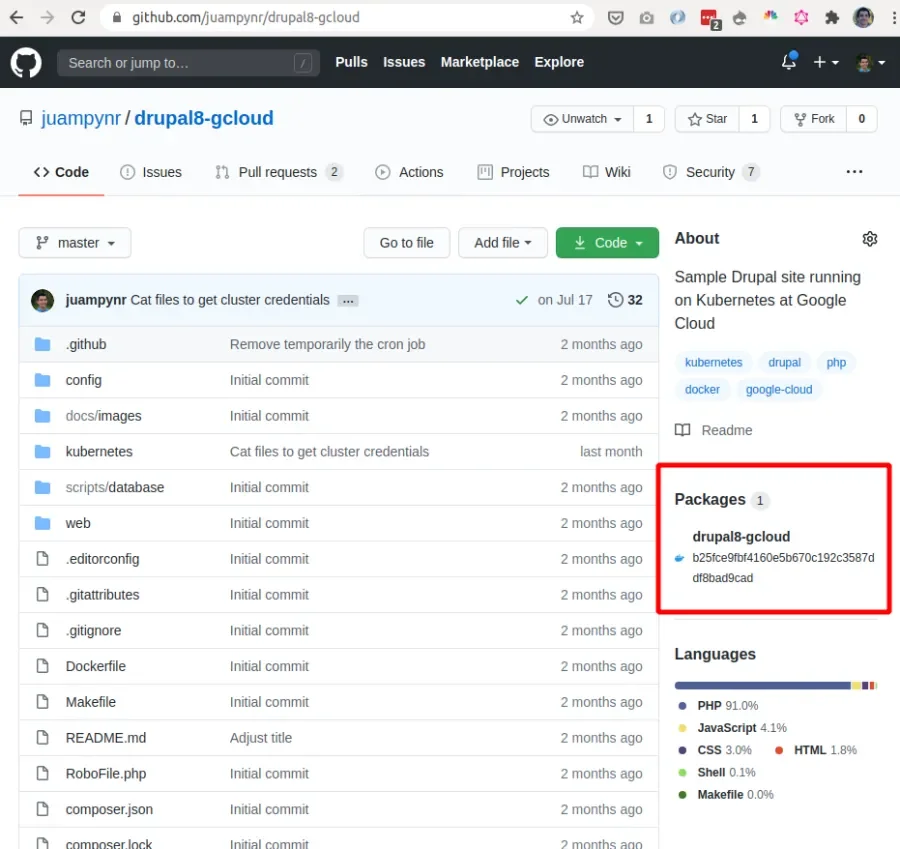

docker pull docker.pkg.github.com/juampynr/drupal8-gcloud/drupal8-gcloud:${GITHUB_SHA}Each time code is pushed to master branch, GitHub Actions will build a Docker image and store it along with the repository as a Package. Here you can see the section within the repository where you can access to all available Packages. Each package is a Docker image containing all the required files to run the application such as Composer dependencies or configuration files:

The step ends with docker pull because docker push may fail due to a network error, in which case we don’t want to proceed with the workflow. docker pull attempts to fetch the image from GitHub Packages and returns an error if it can’t, which stops the workflow.
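Failing fast is what the workflow wants, but a single dropped connection during docker pull also fails the whole run. If you would rather tolerate transient network errors, one option (not used in the demo repository) is to wrap the command in a small retry loop. This is a sketch; retry and the RETRY_DELAY variable are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper, not part of the demo repository: run a command up to
# N times, sleeping between attempts, and return the exit status of the last try.
retry() {
  attempts="$1"
  shift
  n=1
  while :; do
    # A successful run ends the loop immediately.
    "$@" && return 0
    status=$?
    if [ "$n" -ge "$attempts" ]; then
      return "$status"
    fi
    n=$((n + 1))
    # Delay in seconds between attempts; defaults to 5.
    sleep "${RETRY_DELAY:-5}"
  done
}
```

In the build step, the last line could then read retry 3 docker pull docker.pkg.github.com/juampynr/drupal8-gcloud/drupal8-gcloud:${GITHUB_SHA}. Because the pull only succeeds once the image exists in GitHub Packages, retrying it does not hide a failed push; it only smooths over a flaky download.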

Installing gcloud

We are using the official Google Cloud action to authenticate against Google Cloud. Here is the step within the GitHub Actions workflow:

https://github.com/juampynr/drupal8-gcloud/blob/master/.github/workflows/ci.yml

```yaml
- name: Install gcloud
  uses: GoogleCloudPlatform/github-actions/setup-gcloud@master
  with:
    version: '295.0.0'
    project_id: ${{ secrets.GCP_PROJECT_ID }}
    service_account_key: ${{ secrets.GCP_SA_KEY }}
    export_default_credentials: true
```

The setup-gcloud action requires the following parameters:

- The project ID, which we have stored as a GitHub Secret.

- A service account key, stored as a GitHub Secret and encoded as a Base64 string.

A service account, according to Google Cloud’s official Security and Identity documentation, “is a special kind of account used by an application or a virtual machine (VM) instance, not a person. Applications use service accounts to make authorized API calls.” The main benefit of service accounts is that they save you from having to enter your personal account details (in this case, your Google username and password) on remote services.

You can find the steps to create a service account in the Google Cloud documentation. Make sure that you select the Kubernetes Engine Developer role. The result is a JSON key file, which you can encode via cat key.json | base64 and save as the GCP_SA_KEY GitHub secret that the workflow reads.
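The encoding step can be sketched as follows. GNU base64, the one on most Linux systems, wraps its output every 76 characters, so this sketch strips newlines with tr to get a single-line value that is easier to paste into the secret form. encode_key is a hypothetical helper, and the dummy key below only stands in for the key.json downloaded from Google Cloud.

```shell
#!/bin/sh
# Hypothetical helper: Base64-encode a key file as a single line.
# tr removes the line breaks that GNU base64 inserts every 76 characters.
encode_key() {
  base64 < "$1" | tr -d '\n'
}

# A dummy stand-in for the real service account key.json.
key_file=$(mktemp)
trap 'rm -f "$key_file"' EXIT
printf '%s\n' '{"type": "service_account", "project_id": "heroic-bliss-279608"}' > "$key_file"

# This value is what would be saved as the GCP_SA_KEY secret.
encoded=$(encode_key "$key_file")
printf '%s\n' "$encoded"
```

Decoding the value with base64 -d gives back the original file contents, which is a quick way to check the secret before saving it.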

Downloading the cluster details

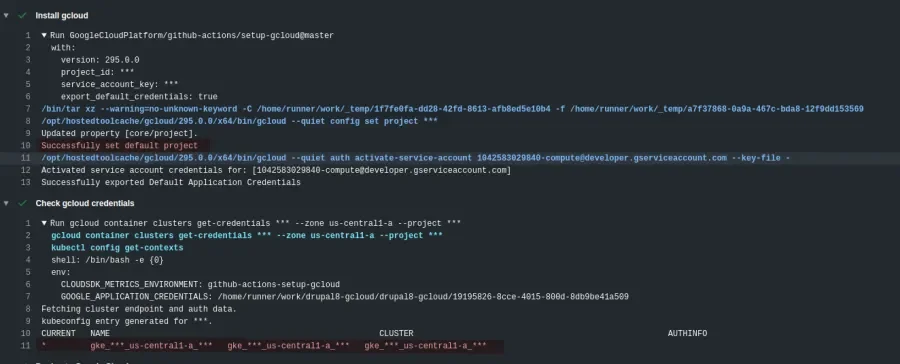

Once we have authenticated against Google Cloud via the GitHub action in the previous section, downloading the cluster details is a trivial task since gcloud has a command to accomplish just that. Here is the step within the GitHub Actions workflow:

https://github.com/juampynr/drupal8-gcloud/blob/master/.github/workflows/ci.yml

- name: Check gcloud credentials

run: |

gcloud container clusters get-credentials ${{ secrets.GCP_CLUSTER_ID }} --zone us-central1-a --project ${{ secrets.GCP_PROJECT_ID }}

kubectl config get-contextsThe first command, gcloud container clusters get-credentials, is what downloads the credentials and stores them so future kubectl commands can use them. The second command, kubectl config get-contexts, is just a verification to print out the available Kubernetes clusters. Here is the output of this and the previous step for a GitHub Actions run:

Running cron jobs via Kubernetes

The set of Kubernetes objects that the demo repository uses is located at https://github.com/juampynr/drupal8-gcloud/tree/master/kubernetes. These are almost identical to the ones used for the Kubernetes cluster hosted at DigitalOcean, which we covered in detail in Continuous Deployment, Infrastructure as Code, and Drupal: Part 3. What’s different for Google Cloud is how the CronJob object authenticates and runs Drupal cron. Here are its contents:

https://raw.githubusercontent.com/juampynr/drupal8-gcloud/master/kubernetes/drupal-deployment.yaml

```yaml
# batch/v1beta1 was current when this was written. Kubernetes 1.21+ serves
# CronJob as batch/v1, and batch/v1beta1 was removed in Kubernetes 1.25.
apiVersion: batch/v1beta1
kind: CronJob
metadata:
  name: drupal-cron
spec:
  schedule: "*/2 * * * *"
  concurrencyPolicy: Forbid
  jobTemplate:
    spec:
      template:
        spec:
          containers:
            - name: drupal-cron
              image: juampynr/gcloud-cronjob:latest
              volumeMounts:
                - name: google-cloud-key
                  mountPath: /var/secrets/google
              command: ["/bin/sh","-c"]
              # Authenticate, fetch the cluster credentials, then run
              # Drupal cron inside the first frontend (Drupal) pod.
              args:
                - gcloud auth activate-service-account --key-file=/var/secrets/google/key.json;
                  gcloud container clusters get-credentials `cat /var/secrets/google/cluster_id` --zone=`cat /var/secrets/google/cluster_zone` --project=`cat /var/secrets/google/project_id`;
                  POD_NAME=$(kubectl get pods -l tier=frontend -o=jsonpath='{.items[0].metadata.name}');
                  kubectl exec $POD_NAME -c drupal -- vendor/bin/drush core:cron;
          volumes:
            - name: google-cloud-key
              secret:
                secretName: creds
          restartPolicy: OnFailure
```

There are a few things worth pointing out from the above object:

- schedule: "*/2 * * * *" means that Kubernetes will run the container’s command every two minutes.

- concurrencyPolicy: Forbid prevents a new cron run from starting while the previous one is still running.

- image: juampynr/gcloud-cronjob:latest is a Docker image that contains the gcloud and kubectl commands.

- gcloud requires a few secrets in order to authenticate against Google Cloud and then identify and download the cluster details. We have created a Kubernetes secret with them and mounted it as a volume at /var/secrets/google.

- Finally, the args section sends a command to the container running Drupal via kubectl exec $POD_NAME -c drupal -- vendor/bin/drush core:cron, which runs Drupal cron.
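The article does not show how the creds secret was created. One way, sketched below under that assumption, is to put the four values the CronJob reads (key.json, cluster_id, cluster_zone, and project_id) into a directory, one file per key. prepare_creds_dir is a hypothetical helper, and the example run uses a dummy key and the cluster values from earlier in this article.

```shell
#!/bin/sh
# Hypothetical helper: build a directory whose file names match the paths the
# drupal-cron CronJob reads from /var/secrets/google.
# Arguments: output dir, key file, cluster id, zone, project id.
prepare_creds_dir() {
  dir="$1"
  mkdir -p "$dir"
  cp "$2" "$dir/key.json"
  printf '%s' "$3" > "$dir/cluster_id"
  printf '%s' "$4" > "$dir/cluster_zone"
  printf '%s' "$5" > "$dir/project_id"
}

# Example run in a temporary directory, using a dummy key instead of a real one.
work=$(mktemp -d)
trap 'rm -rf "$work"' EXIT
printf '%s\n' '{"type": "service_account"}' > "$work/dummy-key.json"
prepare_creds_dir "$work/creds" "$work/dummy-key.json" \
  my-first-cluster-1 us-central1-a heroic-bliss-279608
ls "$work/creds"
```

With a real key in place, kubectl create secret generic creds --from-file=./creds creates the secret, since kubectl turns each file in a directory passed to --from-file into a key of the same name. Mounted as a volume, those keys appear as files under /var/secrets/google.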

Conclusion

So far, the promise of the Cloud Native Computing Foundation holds: running a web application on Kubernetes is very similar across different cloud providers.

The main difference between hosting it at DigitalOcean versus Google Cloud is authentication, but once you have downloaded the Kubernetes cluster configuration, everything else is identical. This is a great advantage in terms of portability as it allows large projects to use multiple cloud providers and smaller ones to move from one cloud provider to another.

If you want to try this setup with any of your Drupal projects, feel free to copy the GitHub Actions workflow and the Kubernetes objects from the demo repository.