A few months ago, CrashPlan announced that they were terminating service for home users, in favor of small business and enterprise plans. I’d been a happy user for many years, but this announcement came along with more than just a significant price increase. CrashPlan removed the option for local computer-to-computer or NAS backups, which is key when doing full restores on a home internet connection. Also, as someone paying month-to-month, I was given only 2 months to migrate to their new service or cancel my account, losing access to historical cloud backups that might be as little as 3 months old.

I was pretty unhappy with how they handled the transition, so I started investigating alternative software and services.

The Table Stakes

These are the basics I expect from any backup software today. If any of these were missing, I went on to the next candidate on my list. Surprisingly, this led to us updating our security handbook to remove recommendations for both Backblaze and Carbonite as their encryption support is lacking.

Backup encryption

All backups should be stored with zero-knowledge encryption. In other words, a compromise of the backup storage itself should not disclose any of my data. A backup provider should not require storing any encryption keys, even in escrow.

Block-level deduplication at the cloud storage level

I don’t want to ever pay for the storage of the same data twice. Much of my work involves large archives or duplicate code shared across multiple projects. Local storage is much cheaper, so I’m less concerned about the costs there.

Block-level deduplication over the network

Like all Lullabots, I work from home. That means I’m subject to an asymmetrical internet connection, where my upload bandwidth is significantly slower than my download bandwidth. For off-site backup to be effective for me, it must detect previously uploaded blocks and skip uploading them again. Otherwise, an initial backup that should take weeks could stretch into months, or never finish at all.
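To make "detect previously uploaded blocks and skip them" concrete, here is a minimal sketch. It assumes fixed-size blocks, GNU coreutils (`split`, `sha256sum`), and a local directory standing in for cloud storage. The function name `store_blocks` and the 4 MiB default block size are made up for illustration; real backup tools use their own chunking and storage formats.

```shell
# Sketch of block-level deduplication. A local directory stands in for the
# cloud bucket; store_blocks and its arguments are illustrative names only.

# store_blocks FILE STORE [BLOCK_SIZE_BYTES]
# Splits FILE into fixed-size blocks, names each block by its SHA-256 hash,
# and copies ("uploads") only the blocks STORE does not already hold.
# Prints the number of blocks that were actually copied.
store_blocks() {
  local file="$1" store="$2" block_size="${3:-4194304}"
  local tmp block hash uploaded=0
  tmp=$(mktemp -d) || return 1
  mkdir -p "$store"
  split -b "$block_size" "$file" "$tmp/block."
  for block in "$tmp"/block.*; do
    [ -e "$block" ] || continue          # an empty input produces no blocks
    hash=$(sha256sum "$block" | cut -d' ' -f1)
    if [ ! -e "$store/$hash" ]; then     # unseen block: upload it
      cp "$block" "$store/$hash"
      uploaded=$((uploaded + 1))
    fi
  done
  rm -rf "$tmp"
  echo "$uploaded"
}
```

Running it twice on the same file copies nothing the second time, and appending to the file copies only the blocks that changed. That is the behavior that keeps a slow upload link usable.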

Backup archive integrity checks

Since we’re deduplicating our data, we really want to be sure it doesn't have errors in it. Each backup and its data should have checksums that can be verified.
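As a rough illustration of what "checksums that can be verified" means, the sketch below records a SHA-256 checksum for every file in a directory and later checks the files against that list. It assumes GNU coreutils and findutils; `write_manifest` and `verify_manifest` are hypothetical helper names, not part of any tool discussed here.

```shell
# write_manifest DIR MANIFEST
# Records a SHA-256 checksum for every file under DIR.
write_manifest() {
  local dir="$1" manifest="$2"
  ( cd "$dir" && find . -type f -print0 | sort -z | xargs -0 -r sha256sum ) > "$manifest"
}

# verify_manifest DIR MANIFEST
# Succeeds only if every recorded file still matches its checksum.
# Mismatched or missing files are reported by sha256sum itself.
verify_manifest() {
  local dir="$1" manifest="$2"
  ( cd "$dir" && sha256sum --check --quiet - ) < "$manifest"
}
```

A backup tool does the equivalent against its own block store, but the principle is the same: a stored hash is compared with a freshly computed one, and any difference is treated as corruption.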

Notification of errors and backup status over email

The only thing worse than no backups is silent failures of a backup system. Hosted services should monitor clients for backups, and email when they don’t back up for a set period of time. Applications should send emails or show local notifications on errors.
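For tools that don't monitor themselves, a small staleness check can fill the gap. This is only a sketch: it assumes your backup job touches a marker file after each successful run, and that GNU `stat` and `date` are available. The function name `backup_is_stale` and the marker file are hypothetical.

```shell
# backup_is_stale MARKER MAX_AGE_HOURS
# Returns success (0) when MARKER is missing or was last modified more than
# MAX_AGE_HOURS ago. The backup job is assumed to touch MARKER on success.
backup_is_stale() {
  local marker="$1" max_age_hours="$2" now mtime
  [ -e "$marker" ] || return 0            # never backed up counts as stale
  now=$(date +%s)
  mtime=$(stat -c %Y "$marker") || return 0
  [ $((now - mtime)) -gt $((max_age_hours * 3600)) ]
}
```

From a daily cron job, something like `backup_is_stale /path/to/marker 48 && echo "No backup in 48 hours"` would produce output only when there is a problem, and cron typically emails any output to the job's owner if mail is configured on the machine.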

External drive support

I have an external USB hard drive I use for archived document storage. I want that to be backed up to the cloud and for backups to be skipped (and not deleted) when it’s disconnected.

The Wish List

Features I would really like to have but could get by without.

- Client support for macOS, Linux, and Windows. I’ll deal with OS-specific apps if I have to, but I liked how CrashPlan covered almost all of my backup needs for my Mac laptop, a Windows desktop, and our NAS.

- Asymmetric encryption instead of a shared key. This allows backup software to use a public key for most operations, and keep the private key in memory only during restores and other operations.

- Support for both local and remote destinations in the same application.

- “Bare metal” support for restores. There’s nothing better than getting a replacement computer or hard drive, plugging it in, and coming back to an identical workspace from before a loss or failure.

- Monitoring of files for changes, instead of scheduled full-disk re-scans. This helps with performance and ensures backups are fresh.

- Append-only backup destinations, or versioning of the backup destination itself. This helps to protect against client bugs modifying or deleting old backups and is one of the features I really liked in CrashPlan.

My Backup Picks

Arq for macOS and Windows Cloud Backup

Arq Backup from Haystack Software should meet the needs of most people, as long as you are happy with managing your own storage. This could be as simple as Dropbox or Google Drive, or as complex as S3 or SFTP. I ended up using Backblaze B2 for all of my cloud storage.

Arq is an incredibly light application, using just a fraction of the system resources that CrashPlan used. CrashPlan would often use close to 1GB of memory for its background service, while Arq uses around 60MB. One license covers both macOS and Windows, which is a nice bonus.

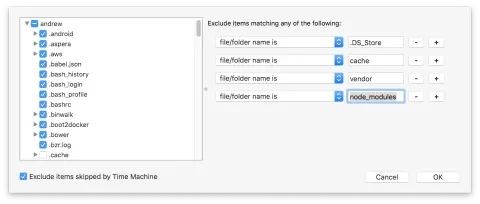

See Arq’s documentation to learn how to set it up. For developers, setting up exclude patterns significantly helps with optimizing backup size and time. I work mostly with PHP and JavaScript, so I ignore vendor and node_modules. After all, most of the time I’ll be restoring from a local backup, and I can always rebuild those directories as needed.
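Before writing exclude patterns, it helps to see which directories they would actually match. The sketch below lists every `vendor` and `node_modules` directory under a starting point without descending into them. `list_rebuildable_dirs` is a hypothetical helper name, and the result is only a list of candidates; the exclusions themselves still have to be entered in the backup application.

```shell
# list_rebuildable_dirs ROOT
# Prints each vendor or node_modules directory under ROOT, one per line.
# -prune stops find from descending, so nested copies are not listed twice.
list_rebuildable_dirs() {
  local root="$1"
  find "$root" -type d \( -name vendor -o -name node_modules \) -prune -print | sort
}
```

Piping the output through `du -sh` gives a quick sense of how much backup space and upload time the excludes will save.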

Arq on Windows is clearly not as polished as Arq on macOS. The interface has some odd bugs, but backups and restores seem solid. You can restore macOS backups on Windows and vice-versa, though some metadata and permissions will be lost in the process. I’m not sure I’d use Arq if I worked primarily in Windows. However, it’s good enough that for me it wasn’t worth the time and money to set up something else.

Arq is missing Linux client support, though it can back up to any NAS over a mount or SFTP connection.

Like many applications in this space, theoretically, the client can corrupt or delete your existing backups. If this is a concern, be sure to set up something like Amazon S3’s lifecycle rules to preserve your backup set for some period of time via server-side controls. This will increase storage costs slightly but also protects against bugs like this one that mistakenly deleted backup objects.

There are some complaints about issues restoring backups. However, it seems like there are complaints about every backup tool. None of my Arq-using colleagues have ever had trouble. Since I’m using different tools for local backups, and my test restores have all worked perfectly, I’m not very concerned. This post about how Arq blocks backups during verification is an interesting (if overly ranty) read and may matter if you have a large dataset and a very slow internet connection. For comparison, my backup set is currently around 50 GB and validated in around 30 minutes over my 30/5 cable connection.

Time Machine for macOS Local Backup

Time Machine is really the only option on macOS for bare-metal restores. It supports filesystem encryption out of the box, though backups are file level instead of block level. It’s by far the easiest backup system I’ve ever used. Restores can be done through Internet Recovery or through the first-run setup wizard on a new Mac. It’s pretty awesome when you can get a brand-new machine, start a restore, and come back to a complete restore of your old environment, right down to open applications and windows.

Time Machine network backups (even to a Time Capsule) are notoriously unreliable, so stick with an external hard drive instead. Reading encrypted backups is impossible outside of macOS, so have an alternate backup system in place if you care about cross-OS restores.

File History Windows Local Backup

I set up File History for Windows in Boot Camp and on a Windows desktop. File History can back up to an external drive, a network share, or an iSCSI target (since those just show up as additional disks). Network shares do not support encryption with BitLocker, so I set up iSCSI by following this guide. This works perfectly for a desktop that’s always wired in. For Boot Camp on my Mac, I can’t save the backup password securely (because BitLocker doesn’t work with Boot Camp), so I have to remember to enter it on boot and check backups every so often.

Surprisingly, it only backs up part of your user folder by default, so watch for any Application Data folders you want to add to the backup set.

It looked like File History was going to be removed in the Fall Creators Update, but it came back before the final release. Presumably, Microsoft is working on some sort of cloud-backup OneDrive solution for the future. Hopefully, it keeps an option for local backups too.

Duply + Duplicity for Linux and NAS Cloud Backup

Duply (which uses duplicity behind the scenes) is currently the best and most reliable cloud backup system on Linux. In my case, I have an Ubuntu server I use as a NAS. It contains backups of our computers, as well as shared files like our photo library. Locally, it uses RAID1 to protect against hardware failure, LVM to slice volumes, and btrfs + snapper to guard against accidental deletions and changes. Individual volumes are backed up to Backblaze B2 with Duply as needed.

Duplicity has been in active development for over a decade. I like how it uses GPG for encryption. Duplicity is best for archive backups, especially for large static data sets. Pruning old data can be problematic for Duplicity. For example, my photo library (which is also uploaded to Google Photos) mostly adds new data, with deletions and changes being rare. In this case, the incremental model Duplicity uses isn’t a problem. However, Duplicity would totally fall over backing up a home directory for a workstation, where the data set could significantly change each day. Arq and other backup applications use a “hash backup” strategy, which is roughly similar to how Git stores data.

I manually added a daily cron job in /etc/cron.daily/duply that backs up each data set:

#!/bin/bash

find /etc/duply -mindepth 1 -maxdepth 1 -exec duply \{} backup \;

Note that if you use snapper, duplicity will try to back up the .snapshots directory too! Be sure to set up proper excludes with duply:

# although called exclude, this file is actually a globbing file list

# duplicity accepts some globbing patterns, even including ones here

# here is an example, this incl. only 'dir/bar' except it's subfolder 'foo'

# - dir/bar/foo

# + dir/bar

# - **

# for more details see duplicity manpage, section File Selection

# http://duplicity.nongnu.org/duplicity.1.html#sect9

- **/.cache

- **/.snapshots

One more note: Duplicity relies on a cache of metadata that is stored in ~/.cache/duplicity. On Ubuntu, if you run sudo duplicity, $HOME will be that of your current user account. If you run it with cron or in a root shell with sudo -i, it will be /root. If a backup is interrupted, and you switch the method you used to elevate to root, backups may start from the beginning again. I suggest always using sudo -H to ensure the cache is the same as what cron jobs use.
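Another way to avoid the cache mismatch is to pin HOME inside the backup script itself. The following is a sketch of a variant of the cron job above, not what I actually run. The variables `PROFILE_DIR`, `DUPLY_BIN`, and `BACKUP_HOME` are hypothetical knobs added for illustration, and unlike the `find` one-liner it only considers directories.

```shell
# Variant of the cron job that pins HOME so duplicity always uses the same
# cache directory, however the script was started.
# PROFILE_DIR, DUPLY_BIN and BACKUP_HOME are hypothetical knobs for this sketch.
backup_all_profiles() {
  local profile_dir="${PROFILE_DIR:-/etc/duply}"
  local duply_bin="${DUPLY_BIN:-duply}"
  local backup_home="${BACKUP_HOME:-/root}"
  local profile failed=0
  for profile in "$profile_dir"/*/; do
    [ -d "$profile" ] || continue
    # ${profile%/} strips the trailing slash left by the glob.
    HOME="$backup_home" "$duply_bin" "${profile%/}" backup || failed=1
  done
  return "$failed"
}
```

Calling `backup_all_profiles` at the end of the script runs every profile in turn and returns a non-zero status if any of them failed, which makes failures visible to whatever is watching the cron job.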

About Cloud Storage Pricing

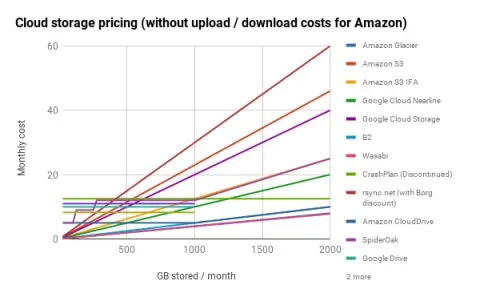

None of my finalist backup applications offer any sort of cloud storage of their own. Instead, they support a variety of providers including AWS, Dropbox, and Google Drive. If your backup set is small enough, you may be able to use storage you already get for free. Pricing changes fairly often, but this chart (and the underlying spreadsheet) should serve as a rough benchmark between providers. I’ve included the discontinued CrashPlan unlimited backup as a benchmark.

I ended up choosing Backblaze B2 as my primary provider. They offered the best balance of price, durability, and ease of use. I’m currently paying around $4.20 a month for just shy of 850GB of storage. Compared to Amazon Glacier, there’s nothing special to worry about for restores. When I first set up in September, B2 had several days of intermittent outages, with constant 503s. They’ve been fine in the months since, and changing providers down the line is fairly straightforward with Rclone. Several of my colleagues use S3 and Google’s cloud storage and are happy with them.

Hash Backup Apps are the Ones to Watch

There are several new backup applications in the “hash backup” space. Arq is considered a hash-backup tool, while Duplicity is an incremental backup tool. Hash backup tools hash blocks and store them (similar to how Git works), while other backup tools use a different model with an initial backup and then a chain of changes (like CVS or Subversion). Based on how verification and backups appeared to work, I believe CrashPlan also used a hash model.

| Hash Backups | Incremental Backups |
| --- | --- |
| Garbage collection of expired backups is easy, as you just delete unreferenced objects. Deleting a backup in the middle of a backup timeline is also trivial. | Deleting expired data requires creating a new “full” backup chain from scratch. |
| Deduplication is easy since each block is hashed and stored once. | Deduplication isn’t a default part of the architecture (but is possible to include). |
| Data verification against a client can be done with hashes, which cloud providers can send via API responses, saving download bandwidth. | Data verification requires downloading the backup set and comparing against existing files. |
| Possible to deduplicate data shared among multiple clients. | Deduplication between clients requires a server in the middle. |
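The garbage-collection point in the comparison above is easier to see with a toy model. In this sketch, a store directory holds one file per block (named by its hash), and each backup is a manifest file listing the hashes it needs, one per line. That layout and the name `gc_unreferenced` are assumptions made for illustration, not how any particular tool stores its data.

```shell
# gc_unreferenced STORE MANIFEST_DIR
# STORE holds one file per block, named by its hash. Each file in MANIFEST_DIR
# describes one backup as a list of block hashes, one per line.
# Deletes every block no manifest references and prints how many were removed.
gc_unreferenced() {
  local store="$1" manifest_dir="$2" referenced object removed=0
  referenced=$(mktemp) || return 1
  find "$manifest_dir" -type f -exec cat {} + | sort -u > "$referenced"
  for object in "$store"/*; do
    [ -e "$object" ] || continue
    # -x matches whole lines, -F treats the hash as a fixed string.
    if ! grep -qxF "$(basename "$object")" "$referenced"; then
      rm -- "$object"
      removed=$((removed + 1))
    fi
  done
  rm -f "$referenced"
  echo "$removed"
}
```

Expiring a backup from the middle of the timeline is then just deleting its manifest and running the collector. Blocks still used by older or newer backups stay put, and nothing has to be re-uploaded.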

I tried several of these newer backup tools, but they were either missing cloud support or did not seem stable enough yet for my use.

BorgBackup

BorgBackup has no built-in cloud support but can store remote data with SSH. It’s best if the server end can run Borg too, instead of just being a dumb file store. As such, it’s expensive to run, and wouldn’t protect against ransomware on the server.

While BorgBackup caches scan data, it walks the filesystem instead of monitoring it.

It’s slow-ish for initial backups as it only processes files one at a time, not in parallel. Version 1.2 hopes to improve this. It took around 20 minutes to do a local backup of my code and vagrant workspaces (lots of small files, ~12GB) to a local target. An update backup (with one or two file changes) took ~5 minutes to run. This was on a 2016 MacBook Pro with a fast SSD and an i7 processor. There’s no way it would scale to backing up my whole home directory.

I thought about off-site syncing to S3 or similar with Rclone. However, that means downloading the whole archive to restore anything. It also doubles your local storage space requirements - for example, on my NAS I want to back up photos only to the cloud since the photos directory itself is a backup.

Duplicacy

Duplicacy is an open-source but not free-software licensed backup tool. It’s obviously more open than Arq, but not comparable to something like Duplicity. I found it confusing that “repository” in its UI is the source of the backup data, and not the destination, unlike every other tool I tested. It intends for all backup clients to use the same destination, meaning that a large file copied between two computers will only be stored once. That could be a significant cost saving depending on your data set.

However, Duplicacy doesn’t back up macOS metadata correctly, so I can’t use it there. I tried it out on Linux, but I encountered bugs with permissions on restore. With some additional maturity, this could be the Arq-for-Linux equivalent.

Duplicati

Duplicati is a .NET application, but is supported on Linux and macOS with Mono. The stable version has been unmaintained since 2013, so I wasn’t willing to set it up. The 2.0 branch was promoted to “beta” in August 2017, with active development. Version numbers in software can be somewhat arbitrary, and I’m happy to use pre-release version numbers that have been around for years with good community reports. Such a recent beta gave me pause on using this for my backups. Now that I’m not rushing to upload my initial backups before CrashPlan closed my account, I hope to look at this again.

HashBackup

HashBackup is in beta (but has been in use since 2010), and is closed source. There’s no public bug tracker or mailing list so it’s hard to get a feel for its stability. I’d like to investigate this further for my NAS backups, but I felt more comfortable using Duplicity as a “beta” backup solution since it is true Free Software.

Restic

Feature-wise, Restic looks like BorgBackup, but with native cloud storage support. Cool!

Unfortunately, it doesn't compress backup data at all. Deduplication may help with large binary files so it may not matter much in practice. It would depend on the type of data being backed up.

I found several restore bugs in the issue queue, but it’s only 0.7 so it’s not like the developers claim it’s production ready yet.

- Restore code produces inconsistent timestamps/permissions

- Restore panics (on macOS)

- Unable to backup/restore files/dirs with the same name

I plan on checking Restic out again once it hits 1.0 as a replacement for Duplicity.

Fatal Flaws

I found several contenders for backup that had one or more of my basic requirements missing. Here they are, in case your use case is different.

Backblaze

Backblaze’s encryption is not zero-knowledge. You have to give them your passphrase to restore. When you need to restore, they store your backup unencrypted on a server within a zip file.

Carbonite

Carbonite’s backup encryption is only supported for the Windows client. macOS backups are totally unencrypted!

CloudBerry

CloudBerry was initially promising, but it only supports continuous backup in the Windows client. While it does support deduplication, it’s file level instead of block level.

iDrive

iDrive file versions are very limited, with a maximum of 10 file versions for a file. In other words, expect that files being actively worked on over a week will lose old backups quickly. What’s the point of a backup system if I can’t recover a Word document from 2 weeks ago, simply because I’ve been editing it?

Rclone

Rclone is rsync for cloud storage providers. Rclone is awesome - but not a backup tool on its own. When testing Duplicity, I used it to push my local test archives to Backblaze instead of starting backups from the beginning.

SpiderOak

SpiderOak does not have a way to handle purging of historical revisions in a reliable manner. This HN post indicates poor support and slow speeds, so I skipped past further investigation.

Syncovery

Syncovery is a file sync solution that happens to do backup as well. That means it’s mostly focused on individual files, synced directly. It just feels too complex to be sure you have the backup setup right given the other features it has.

Syncovery is also file-based, and not block-based. For example, with Glacier as a target, you “cannot rename items which have been uploaded. When you rename or move files on the local side, they have to be uploaded again.”

Sync

I was intrigued by Sync as it’s one of the few Canadian providers in this space. However, they are really a sync tool that is marketed as a backup tool. It’s no better (or worse) than using a service like Dropbox for backups.

Tarsnap

Tarsnap is $0.25 per GB per month. Using my Arq backup as a benchmark (since it’s deduplicated), my laptop backup alone would cost $12.50 a month. That cost is way beyond the competition.
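The arithmetic behind that figure is just price per GB times backup size: $12.50 at $0.25 per GB corresponds to the roughly 50 GB Arq backup set mentioned earlier. A tiny helper makes it easy to rerun the numbers for other providers. `monthly_cost` is a hypothetical name, and the $0.005 per GB rate for B2 in the comments is only what my bill above implies (about $4.20 for just under 850GB), not a quoted price.

```shell
# monthly_cost PRICE_PER_GB SIZE_GB
# Prints the monthly storage bill in dollars, rounded to two decimals.
monthly_cost() {
  awk -v price="$1" -v gb="$2" 'BEGIN { printf "%.2f\n", price * gb }'
}

# Examples (rates as discussed in this post; the B2 rate is inferred):
#   monthly_cost 0.25 50     -> 12.50   Tarsnap, 50 GB laptop backup
#   monthly_cost 0.005 50    -> 0.25    same data at the inferred B2 rate
```

At those rates the same laptop backup costs about fifty times more on Tarsnap, which is why it didn’t make my list.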

Have you used any of these services or software I've talked about? What do you think about them or do you have any others you find useful?