You did it. You helped launch a new web CMS platform for your organization and rolled it out to all of your stakeholders. It was hard work wrangling all of those requirements and synthesizing them into something everyone wanted to use. If people didn't get 100% of what they wanted, they at least got 80%, and that's 100% better than the last CMS they were forced to use.

If your organization is an archipelago, like a university or state government, you had to balance brand consistency and flexibility to get buy-in. You had to mediate compromises. You might have even had to sell the platform internally because you had no authority to force it on other schools, departments, or agencies. You had to empower content authors but also limit them with smart guardrails.

But the work isn't done. With a CMS serving so many needs, the work is never done. You've reached a significant milestone, but now you have new challenges.

As you rolled the platform out, you gathered your own set of things to improve. Stakeholders will also start submitting their new feature requests. This is to be expected. However, with a platform that powers so many websites with varying needs, this list can become a source of stress and conflict.

We've helped manage several large platform projects from conception to launch to long-term maintenance, and in this article, we'll help you answer these questions:

- How do you establish and enforce guidelines about what does and does not make it onto the platform?

- How do you communicate these values to your organization?

- How do you prioritize what makes it into the platform first?

- How do you close the communication loop and keep stakeholders up to date on the progress of their requests?

First, you need a scoring model.

What is a scoring model?

A scoring model is a tool used to assign comparative value to tasks, projects, and feature requests. It provides a prioritization framework that helps teams decide what will move forward and what will not. Because every change request is scored against the same set of criteria, aligned with the project's goals, requests can be compared directly with one another.

A scoring model provides many benefits.

- Standardizes decision-making against agreed-upon goals

- Helps you make more objective decisions that are less subject to personal interpretation and not based on who is yelling the loudest

- Provides support for denying a request or giving a request a low priority

- Helps prevent you from slipping back into old habits that lead to one-off modifications and inconsistent user experiences across websites

The PRICE scoring model

We've created a variation of a popular scoring model called RICE. You'll find many examples, templates, and explanations about RICE scoring models online. When working with Georgia.gov, we found that we needed to add another criterion: Politics. This wasn't because Georgia.gov represents a political entity but because different state agencies and stakeholders have varying levels of influence and political clout within the organization.

Politics is relevant to any large platform project. Universities have departments and schools that carry more weight and importance. Large media organizations have subsidiaries that bring in more revenue. Usually, the value and distribution of political clout is an implicit but well-known modifier. Knowledge of it is passed down like ancient lore, with the lunch table and conference rooms replacing the campfire as stories and wisdom are shared. It sometimes explains the baffling decisions that get handed down.

Adding politics to the RICE scoring model makes it more explicit. Here's a quick summary of the entire thing.

- Politics - Who made the request, and how much political impact or influence does the individual or group have?

- Reach - If this change is made, how widespread will its effects be? Does the request make sense for the other sites on the platform?

- Impact - How will this change impact all platform sites?

- Confidence - Is the request based on one person's conviction, or is there data to back up the validity of the request?

- Effort - How much development time or effort is required to complete the request?

View an example PRICE spreadsheet. You'll notice we have a Pre-effort column. This is calculated based on the Politics, Reach, Impact, and Confidence columns and represents how important you think the request is before knowing how much development effort it will take.

In other words, a conversation needs to happen before involving developers.
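To make the arithmetic concrete, here is a minimal Python sketch of how a Pre-effort score and a final PRICE score could be calculated. It is an illustration under stated assumptions, not the formula from the example spreadsheet: it borrows the multiplicative approach commonly used with RICE (Reach × Impact × Confidence ÷ Effort) and treats Politics as one more multiplier. The numeric weights, the scales, and the names `POLITICS_WEIGHTS`, `Request`, `pre_effort_score`, and `price_score` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical lookup table: the labels echo the sample Politics dropdown,
# but every numeric weight is an assumption to replace with your own values.
POLITICS_WEIGHTS = {
    "Feather": 0.5,
    "Light": 1.0,
    "Medium": 1.5,
    "Heavy": 2.0,
    "Executive Stakeholder": 3.0,
}


@dataclass
class Request:
    title: str
    politics: str      # key into POLITICS_WEIGHTS
    reach: int         # assumed: number of platform sites that would benefit
    impact: float      # assumed scale: 0.25 (minimal) up to 3.0 (massive)
    confidence: float  # assumed scale: 0.5 (a hunch) up to 1.0 (backed by data)


def pre_effort_score(req: Request) -> float:
    """Importance of a request before any developer estimate exists."""
    return POLITICS_WEIGHTS[req.politics] * req.reach * req.impact * req.confidence


def price_score(req: Request, effort: float) -> float:
    """Pre-effort score divided by effort (for example, person-weeks)."""
    if effort <= 0:
        raise ValueError("effort must be positive")
    return pre_effort_score(req) / effort


# Two made-up requests, each paired with a made-up effort estimate.
requests = [
    (Request("Custom homepage for one agency", "Executive Stakeholder", 1, 3.0, 0.5), 6.0),
    (Request("Accessible accordion component", "Medium", 40, 2.0, 1.0), 4.0),
]

# Highest PRICE score first.
ranked = sorted(requests, key=lambda pair: price_score(*pair), reverse=True)
for req, effort in ranked:
    print(f"{req.title}: pre-effort={pre_effort_score(req):.1f}, "
          f"PRICE={price_score(req, effort):.2f}")
```

With these made-up numbers, the widely useful component outranks the single-site request even though the latter comes from a more influential stakeholder. Whether that is the right outcome for your organization depends on the weights you choose, which is exactly the conversation the model is meant to prompt.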

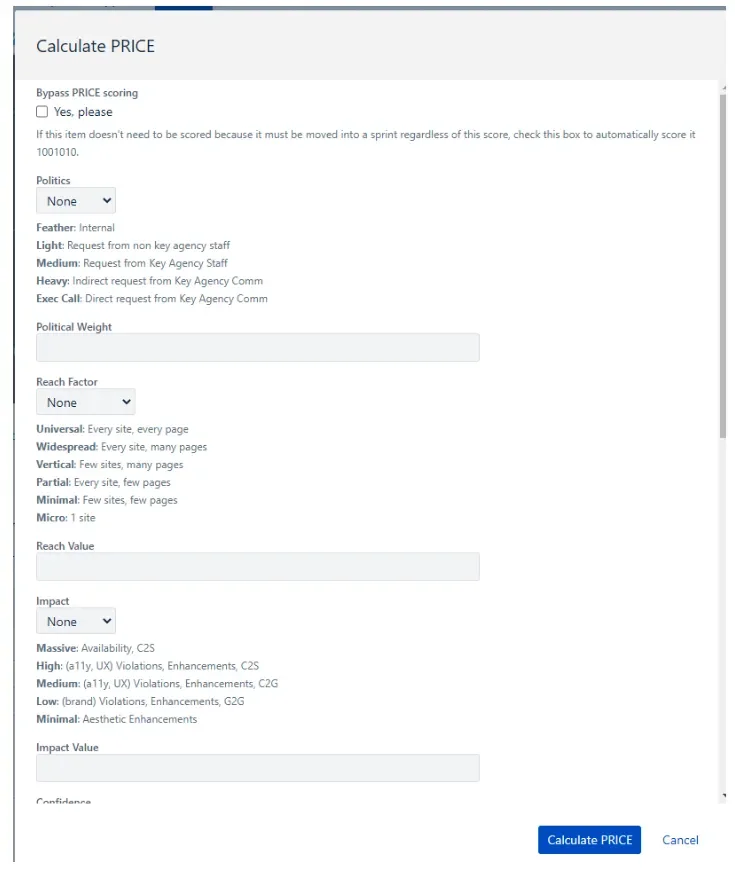

All of the terminology and lookup tables in this sheet should be changed to fit your own evaluation criteria, but we feel these provide a good starting point. For example, in the Politics dropdown sample, we include options like Feather, Light, Medium, Heavy, and Executive Stakeholder, each with a brief description. If these options don't work for your organization, change them to something that fits.

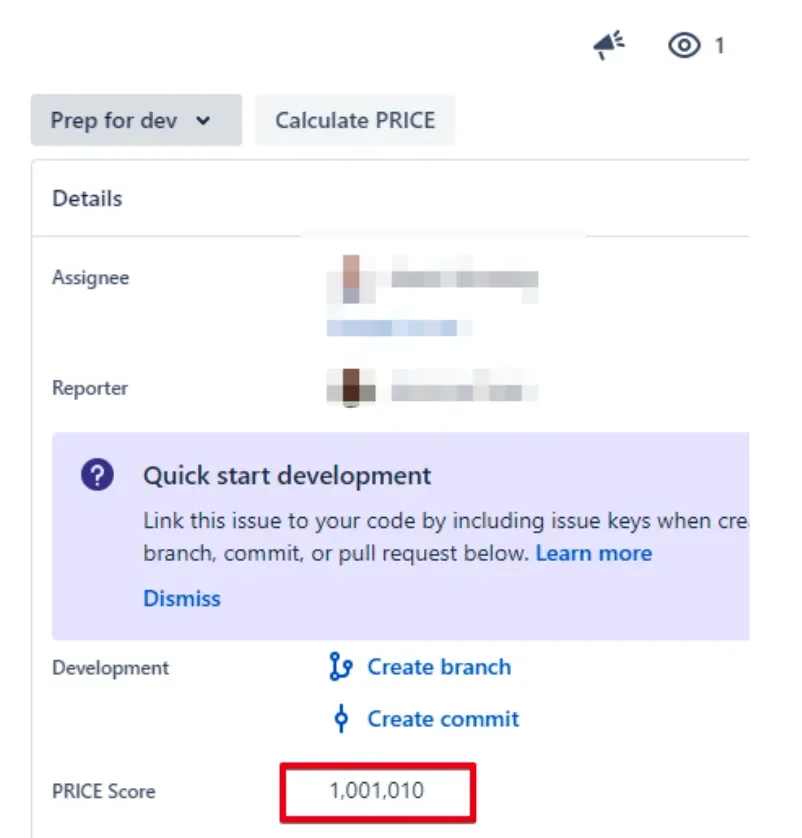

If you use Jira, you can incorporate this model into your ticket workflow.

Using the PRICE model

You probably don't want to share the raw scoring worksheet, but that depends on your internal clients and culture. At a minimum, you should communicate the criteria for making decisions. Saying "no" is hard. Having something to back up your decisions makes saying "no" a little easier.

Above all, provide clear communication. Don't leave your stakeholders wondering what's going on. Acknowledge that a request has been received and is being evaluated. Provide a timeline of when (or if) it will be completed. Give regular updates about your progress. Incorporate these into whatever process you implement.

When your stakeholders are informed, understand the goals of the platform, and have a grasp of the criteria you use to rank requests, you'll be able to say things like, "We measured your request against other priorities (or the goals of the project), and while we can see how this might benefit you, it may cause problems for the other sites on the platform. Let's revisit this in the future."

The lifecycle of a change request

You'll want to map out how each step looks and be clear about it. You won't be able to make every stakeholder happy all of the time, but you can keep them informed.

Request is submitted

How is this done? Do you use project management or ticketing software? Do you use email? Maybe there is a form on your intranet. Whatever you use, be consistent and make sure everyone knows how to use it.

Request is evaluated

You (or the governance team created for this task) evaluate and score the request for politics, reach, impact, and confidence. If the resulting pre-effort score reaches a certain level, you involve the development team to get an effort score.

Who is on the governance team? What score triggers talking with the development team? How long can stakeholders expect this process to take? That's something you'll need to figure out.

Request is approved or denied

If the request is approved, give your stakeholders the good news and give them a sense of the priority and timeframe. For example, "We're working on three features now that we expect to be released by XXX date, and we'll start your changes right afterward."

Once work starts, let them know and give them regular updates. Is there a digital board they can log in to and follow along, or do you send a weekly report based on what's currently being worked on? There are many options, and you must choose what works best for your team. Whatever you decide on, communicate it clearly.

If the request is not approved, be sure to explain why. Go over the platform's goals again and communicate how the request undermines those goals. The request might go against best practices, like accessibility. Or, the request might have too big of an impact on too many other sites using the platform.

Remind the stakeholders of the selection criteria and help them understand your decision. Through the conversation, you might be able to settle on an acceptable compromise.
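If you track requests in software, the lifecycle above can be sketched as a small state machine. The Python below is only an illustration: the stage names, the `EFFORT_THRESHOLD` value, and the choice to deny (rather than defer) requests that fall below the threshold are assumptions, and `ChangeRequest`, `move_to`, and `after_evaluation` are hypothetical names. Every status change requires a note, which mirrors the advice to acknowledge, update, and explain at each step.

```python
from enum import Enum


class Status(Enum):
    SUBMITTED = "submitted"
    EVALUATING = "evaluating"
    AWAITING_EFFORT = "awaiting effort estimate"
    APPROVED = "approved"
    DENIED = "denied"
    IN_PROGRESS = "in progress"
    RELEASED = "released"


# Allowed moves between stages; anything else is rejected.
TRANSITIONS = {
    Status.SUBMITTED: {Status.EVALUATING},
    Status.EVALUATING: {Status.AWAITING_EFFORT, Status.DENIED},
    Status.AWAITING_EFFORT: {Status.APPROVED, Status.DENIED},
    Status.APPROVED: {Status.IN_PROGRESS},
    Status.IN_PROGRESS: {Status.RELEASED},
    Status.DENIED: set(),
    Status.RELEASED: set(),
}

# Hypothetical pre-effort score that triggers a developer estimate.
EFFORT_THRESHOLD = 50.0


def after_evaluation(pre_effort: float) -> Status:
    """Pick the next stage once the governance team has scored a request."""
    return Status.AWAITING_EFFORT if pre_effort >= EFFORT_THRESHOLD else Status.DENIED


class ChangeRequest:
    def __init__(self, title: str) -> None:
        self.title = title
        self.status = Status.SUBMITTED
        self.updates: list[str] = []  # messages to share with the stakeholder

    def move_to(self, new_status: Status, note: str) -> None:
        """Change stage, refusing invalid moves and silent status changes."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(
                f"cannot move from {self.status.value} to {new_status.value}"
            )
        if not note.strip():
            raise ValueError("every status change needs a note for the stakeholder")
        self.status = new_status
        self.updates.append(f"{self.title}: {new_status.value} ({note})")


cr = ChangeRequest("Accessible accordion component")
cr.move_to(Status.EVALUATING, "received; the governance team is scoring it")
cr.move_to(after_evaluation(120.0), "scored high enough to ask developers for an estimate")
cr.move_to(Status.APPROVED, "queued behind the features already in progress")
print("\n".join(cr.updates))
```

The list of updates doubles as the communication trail: it could feed a weekly report or a status board, whichever channel your team settles on.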

You can do this

Maintaining a platform that supports dozens, maybe even hundreds, of websites can be daunting. If you're not careful, the goals of the platform can be undermined, or your internal stakeholders can become disillusioned. Either outcome jeopardizes the ROI of your accomplishments. The PRICE model provides a good starting point to ensure that doesn't happen.

We have helped roll out and maintain platforms for multisite systems for state governments like Georgia and universities like UMass Amherst. We know the challenges involved, and if you would like help navigating them, please contact us.