You’re in the middle of a standup giving an update on a particularly thorny bug. Suddenly, the PM starts interrupting, but you can’t understand what they’re saying. You both talk over each other for 20 seconds, until finally, you say “I’ll drop the Hangout and join with my phone”. And, just as you go to hang up, the PM’s words come in clearly. The standup continues.

These sorts of temporary, vexing issues with VoIP and video calls are somewhat normal for those who work online, either at home or in an office. Figuring out the root cause of packet loss and latency is difficult and time-consuming. Is it you or the other end? Your WiFi or your ISP? Or, did your phone start downloading a large update while you were on the call, creating congestion over your internet connection? If we can eliminate one of these variables, it will be much easier to fix latency when it does strike.

Other than throwing more money at your ISP (which might not even be possible), there is a solution to congestion: Quality of Service, or “QoS”. With QoS, your router manages congestion itself, instead of relying on the relatively simple TCP congestion algorithms. At the core, the idea is that the router understands what types of traffic have low latency requirements (like video calls or games), and what types of traffic are “bulk”, like that new multi-gigabyte OS update.

QoS is by no means a new idea. The old WRT54G routers with the Tomato firmware have a QoS system that is still available today. Most prior QoS systems require explicit rules to classify traffic. For example:

- Any HTTP request up to 1 megabyte gets “high” priority

- Any DNS traffic gets “highest” priority

- Any UDP traffic on ports 19302 to 19309 (Google Hangouts voice and video) gets placed in “high” priority

- All other traffic is assigned the “low” priority
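To make the idea concrete, here is a minimal Python sketch of those four rules. It is not how Tomato or any real router implements QoS; the `Flow` class, the `classify` function, and the use of port 80 and port 53 to stand in for "HTTP" and "DNS" are all assumptions made for illustration.

```python
from dataclasses import dataclass

ONE_MEGABYTE = 1_000_000  # assumed meaning of "1 megabyte" in the rule above


@dataclass
class Flow:
    """Hypothetical summary of a traffic flow as a rule engine might see it."""
    protocol: str        # "tcp" or "udp"
    dst_port: int        # destination port of the flow
    bytes_sent: int = 0  # bytes transferred on this flow so far


def classify(flow: Flow) -> str:
    """Return a priority label by checking the example rules in order."""
    if flow.dst_port == 53:
        return "highest"  # DNS traffic
    if flow.protocol == "udp" and 19302 <= flow.dst_port <= 19309:
        return "high"     # Google Hangouts voice and video
    if (flow.protocol == "tcp" and flow.dst_port == 80
            and flow.bytes_sent <= ONE_MEGABYTE):
        return "high"     # small HTTP requests
    return "low"          # everything else


if __name__ == "__main__":
    print(classify(Flow("udp", 19305)))
    print(classify(Flow("tcp", 443, bytes_sent=5_000_000)))
```

Every new service means another branch in `classify`, which is exactly the maintenance burden that makes rule-based QoS hard to keep up with.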

This works well when you have a small number of easily identified traffic flows. However, it gets difficult to manage when you have many different services to identify. Also, many services use common protocols to encapsulate their traffic to make it difficult to block or control. Skype is a classic example of this: it intentionally does everything it can to prevent firewalls from identifying its traffic. HTTPS traffic is also difficult to manage. For example, some services use HTTPS for video chat, where latency matters. At the same time, many remote backup services also use HTTPS for uploads, where latency doesn’t matter. Untangling these two flows is very difficult with most QoS systems.

Ideally, DSCP would allow applications to self-identify their traffic into different classes, without needing classification rules at the firewall. Most applications don’t do this, and Windows in particular blocks applications from setting DSCP flags without special policy settings.
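For the curious, this is roughly what self-identification looks like from the application side. The sketch below uses Python's standard `socket` module to set the IP TOS byte on a UDP socket; the helper names are my own, and the choice of DSCP 46 (Expedited Forwarding, commonly used for voice) is an assumption for the example. Whether the mark survives depends on the operating system and on every network hop in between.

```python
import socket

DSCP_EF = 46  # Expedited Forwarding, a class commonly used for voice traffic


def dscp_to_tos(dscp: int) -> int:
    """Convert a 6-bit DSCP value to the 8-bit TOS byte (DSCP is the top 6 bits)."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be between 0 and 63")
    return dscp << 2


def open_marked_udp_socket(dscp: int = DSCP_EF) -> socket.socket:
    """Create an IPv4 UDP socket whose outgoing packets request the given DSCP."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    # The OS may ignore or reject this request, depending on platform and policy.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp_to_tos(dscp))
    return sock


if __name__ == "__main__":
    print(hex(dscp_to_tos(DSCP_EF)))
```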

A new class of QoS algorithms has been researched and developed in the last decade, known as “Active Queue Management” or AQM. The goal of these algorithms is to be “knobless” - meaning, an administrator shouldn’t need to create and continually manage classification rules. I found this episode of the Packet Pushers Podcast to be invaluable in figuring out AQM. Let’s see how to set up AQM using the “FlowQueue CoDel” algorithm on our home router, and find out how much better it makes our internet connection.

The Software

First, you need to have a router that supports AQM. CoDel has been available in the Linux and FreeBSD kernels for some time, but most consumer and ISP-deployed routers ship ancient versions of either. I’m going to show how to set it up with OPNSense, but similar functionality is available with OpenWRT, LEDE, and pfSense, and likely any other well-maintained router software.

Baseline Speed Tests

It’s important to measure your actual internet connection speed. Don’t go by what package your ISP advertises. There can be a pretty significant variance depending on the local conditions of the network infrastructure.

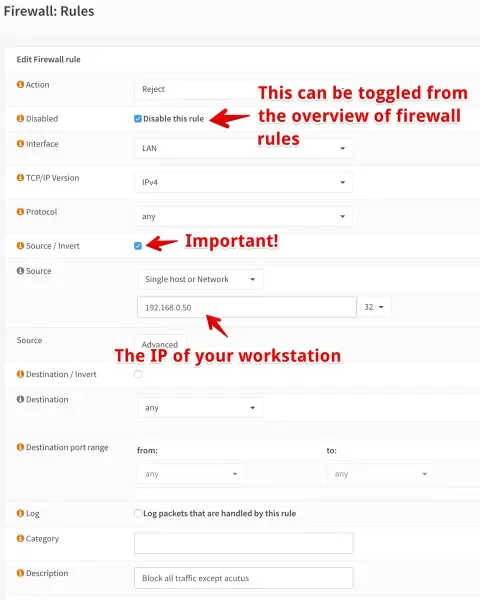

When testing, it’s important to be sure that no other traffic is being transmitted at the same time. It’s easiest to plug a laptop directly into your cable or DSL modem. Quit or pause everything you can, including background services. If your cable modem is in an inconvenient location, you can add firewall rules to restrict traffic. Here’s an example where I used the IP address of my laptop and then blocked all traffic on the LAN interface that didn’t match that IP.

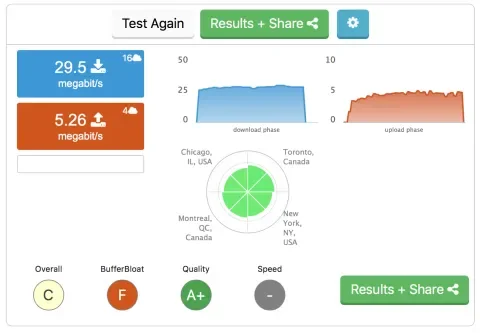

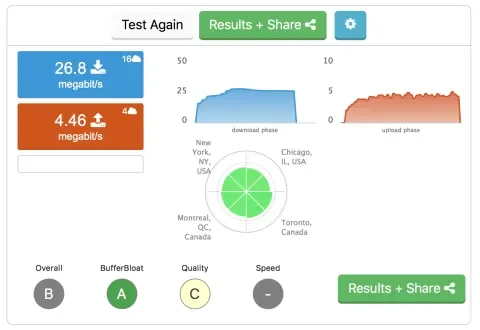

I really like the DSLReports Speedtest. It’s completely JavaScript based, so it works on mobile devices, and includes a bufferbloat metric. This is what it shows for my 30/5 connection, along with a failing grade for latency.

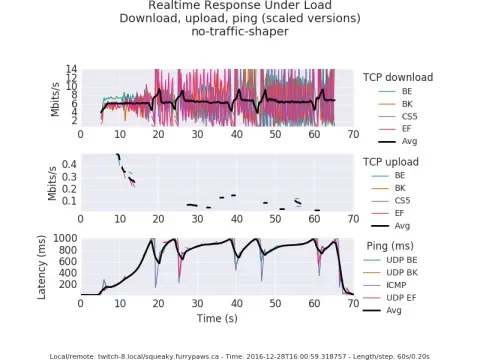

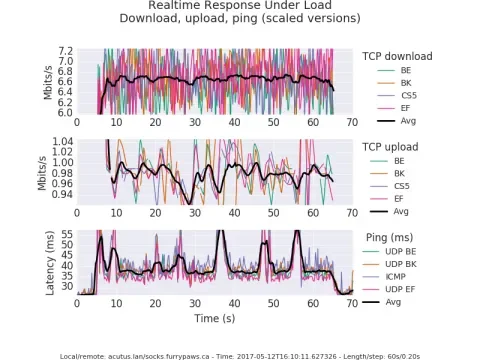

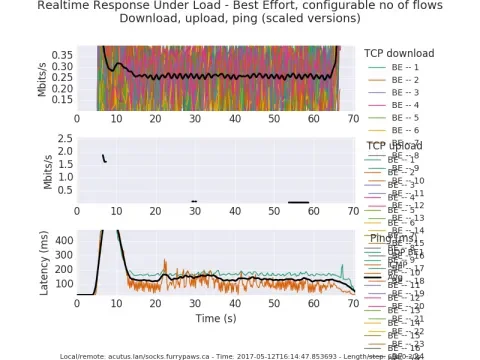

A good CLI alternative is speedtest-cli, but you will have to run a ping alongside it by hand. I also used flent extensively, which provides nice graphs combining bandwidth and latency over time. It requires a server to test with, so it’s not for everyone. Its real-time response under load (RRUL) test is more intense, testing with 4 up and 4 down flows of traffic, combined with a ping test. We can see how badly my connection falls apart, with almost 1000ms of latency and many dropped packets.

$ flent rrul -p all_scaled -l 60 -H remote-host.example.com -o graph.png

Run your chosen test tool a few times, and find the average of the download speed and the upload speed. Keep those numbers, as we’ll use them in the next step.
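If you would rather not do the arithmetic by hand, a few lines of Python will do. This is only a sketch: the `average_speeds` helper is my own, and the numbers in `sample_runs` are made up for illustration, not my measurements.

```python
from statistics import mean


def average_speeds(runs):
    """Average a list of (download_mbit, upload_mbit) results from repeated tests."""
    downloads = [down for down, _ in runs]
    uploads = [up for _, up in runs]
    return mean(downloads), mean(uploads)


if __name__ == "__main__":
    # Made-up example values in Mbit/s; replace with your own test results.
    sample_runs = [(29.5, 4.5), (30.5, 5.5), (30.0, 5.0)]
    down, up = average_speeds(sample_runs)
    print(f"download: {down:.1f} Mbit/s, upload: {up:.1f} Mbit/s")
```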

If your upload speed is less than 5Mbit/s, you might find that FQ CoDel performs poorly. I was originally on a 25/2 connection, and while the performance was improved, VoIP still wasn’t usable under load. Luckily, my ISP upgraded all their packages while I was setting things up, and with a 30/5 connection, everything works as expected. For slower upstream connections, you might find better performance with manual QoS rules, even though it takes much more effort to maintain.

Setting up FQ CoDel

It might not seem intuitive, but at its core, QoS is a firewall function. It’s the firewall that classifies traffic and accepts or blocks it, so the firewall is also the place to mark or modify the same traffic. In OPNSense, the settings are under “Traffic Shaper” inside of the Firewall section. One important note is that while OPNSense uses the pf firewall for rules and NAT, it uses ipfw for traffic shaping.

In OPNSense, the traffic shaper first classifies traffic using rules. Each rule can redirect traffic to a queue, or directly to a pipe. A queue is used to prioritize traffic. Finally, queues feed into pipes, which is where we constrain traffic to a specified limit. While this is the flow of packets through your network, it’s simpler to configure pipes, then queues, and finally rules.

1. Configure pipes to limit bandwidth

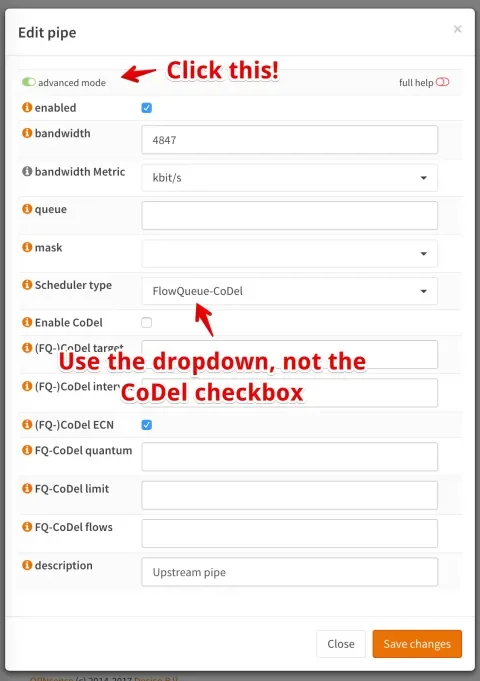

This is where we use the results of our earlier speed tests. We need to configure two pipes: one for upstream traffic, and one for downstream traffic. While other methods of QoS recommend reserving 15-30% of your bandwidth as “overhead”, with fq_codel a reserve of around 3% generally works well. Here are the settings for my “upstream” pipe. Use the same settings for your downstream pipe, adjusting the bandwidth and description.
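The pipe bandwidth is just the measured speed with that small reserve taken off. As a rough sketch, assuming you enter the value in Kbit/s and use a 3% reserve (the function name and the 30 and 5 Mbit/s inputs are illustrative, not a recommendation for your line):

```python
def pipe_bandwidth_kbit(measured_mbit: float, overhead_fraction: float = 0.03) -> int:
    """Return a pipe bandwidth in Kbit/s, leaving a small reserve below the measured speed."""
    if not 0 <= overhead_fraction < 1:
        raise ValueError("overhead_fraction must be in [0, 1)")
    # round() rather than int() so floating-point noise can't drop a whole Kbit/s
    return round(measured_mbit * 1000 * (1 - overhead_fraction))


if __name__ == "__main__":
    # Illustrative inputs in Mbit/s; use the averages from your own speed tests.
    print("downstream pipe:", pipe_bandwidth_kbit(30), "Kbit/s")
    print("upstream pipe:  ", pipe_bandwidth_kbit(5), "Kbit/s")
```

If latency under load is still poor after applying the settings, trying a slightly larger `overhead_fraction` is a reasonable next experiment.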

“ECN” stands for Explicit Congestion Notification, which is a way to let other systems know a given connection is experiencing congestion, instead of relying on just TCP drops. Of course, ECN requires enough bandwidth for upstream packets to be sent reliably, and your ISP would need to respect the packets. I’d suggest benchmarking with it both on and off to see what’s best.

2. Queues for normal flows and high priority traffic

We could skip queues and have rules wired up directly to pipes. However, I achieved lower latency by pushing TCP ACKs and DNS lookups above other traffic. To do that, we need to create three queues.

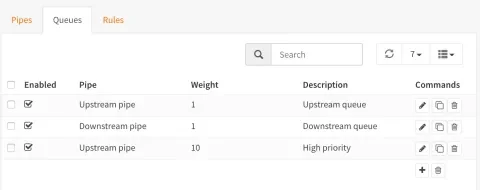

- An Upstream queue for regular traffic being transmitted, with a weight of 1.

- A “High priority upstream” queue for ACKs and DNS, with a weight of 10. In other words, give high priority traffic 10 times the bandwidth of everything else.

- A Downstream queue for inbound traffic, with a weight of 1.

Don’t enable or change any of the CoDel settings, as we’ve handled that in the pipes instead.
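To get a feel for what the weights mean, here is a small sketch. It assumes weights behave as proportional shares between queues that feed the same pipe and are both backlogged at the same time, which is how weighted fair queueing generally works; I have not verified the exact scheduler behaviour in OPNSense. The queue names are just labels for this example.

```python
def shares_under_contention(weights: dict) -> dict:
    """Return each queue's fraction of the pipe when every queue has traffic waiting."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one queue needs a positive weight")
    return {name: weight / total for name, weight in weights.items()}


if __name__ == "__main__":
    # The two upstream queues described above, sharing the upstream pipe.
    upstream_queues = {"upstream": 1, "high-priority-upstream": 10}
    for name, share in shares_under_contention(upstream_queues).items():
        print(f"{name}: {share:.1%} of the pipe while both queues are busy")
```

When the high priority queue is empty, regular traffic is free to use the whole pipe, so the weight only matters during contention.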

3. Classification rules

Finally, we need to actually route traffic through the queues and pipes. We’ll use four rules here:

-

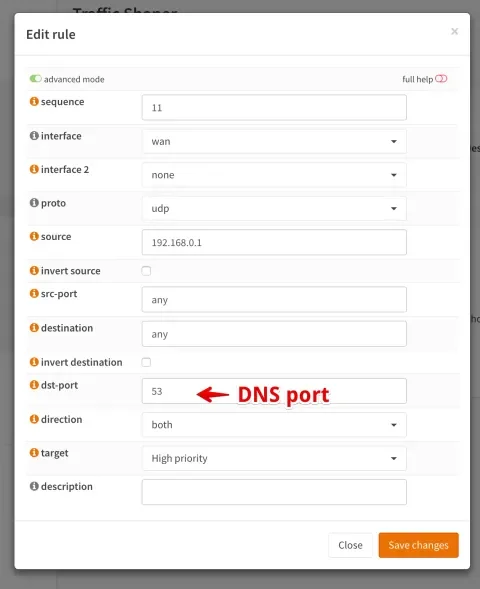

Mark DNS as high priority, but only from our caching DNS server on the router itself. We do this by setting the source IP to our router in the rule.

-

Mark all upstream traffic to the upstream queue.

-

Mark upstream ACK packets only to the high priority queue.

-

Finally, mark inbound download packets to the download queue.
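The intent of the four rules can be sketched in Python as a single decision function. This is a model of the outcome, not of how ipfw or OPNSense evaluates rules: the `Packet` class, the queue names, the `ROUTER_IP` value, and the choice to check the most specific cases first are all assumptions for illustration.

```python
from dataclasses import dataclass

ROUTER_IP = "192.0.2.1"  # hypothetical address of the router's caching DNS server


@dataclass
class Packet:
    """Hypothetical view of a packet as seen by the traffic shaper."""
    direction: str          # "out" for upstream, "in" for downstream
    protocol: str           # "tcp" or "udp"
    src_ip: str
    dst_port: int
    ack_only: bool = False  # True for a TCP ACK that carries no data


def pick_queue(pkt: Packet) -> str:
    """Map a packet to one of the three queues, most specific match first."""
    if pkt.direction == "in":
        return "downstream"
    if pkt.dst_port == 53 and pkt.src_ip == ROUTER_IP:
        return "high-priority-upstream"  # DNS lookups from the router itself
    if pkt.protocol == "tcp" and pkt.ack_only:
        return "high-priority-upstream"  # bare ACKs keep downloads flowing
    return "upstream"


if __name__ == "__main__":
    print(pick_queue(Packet("out", "udp", ROUTER_IP, 53)))
    print(pick_queue(Packet("out", "tcp", "192.0.2.50", 443, ack_only=True)))
    print(pick_queue(Packet("out", "tcp", "192.0.2.50", 443)))
```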

Here's an example for the DNS rule:

Click “Apply” to commit all of the configuration from all three tabs. Time to test!

Look for Improvements

Rerun your speed tests, and see if latency is improved. Ideally, you should see a minimal increase in latency even when your internet connection is fully loaded. While lower round-trip times are better, as long as you’re under 100ms, VoIP and video should be fine. Here’s what the above rules do for my connection. Note the slightly lower transfer speeds, but a dramatic improvement in bufferbloat.
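If you collect ping times by hand while a speed test runs, a short script can summarise them. This is a sketch with made-up sample values; the helper names are my own, and the 100ms limit is simply the rule of thumb mentioned above.

```python
from statistics import mean


def latency_increase_ms(idle_rtts, loaded_rtts):
    """Average extra round-trip time (ms) seen under load compared to an idle line."""
    return mean(loaded_rtts) - mean(idle_rtts)


def ok_for_voip(loaded_rtts, limit_ms: float = 100.0) -> bool:
    """True if every round-trip time measured under load stayed below the limit."""
    return max(loaded_rtts) < limit_ms


if __name__ == "__main__":
    # Made-up ping results in milliseconds; substitute your own measurements.
    idle = [18.0, 20.0, 22.0]
    loaded = [35.0, 48.0, 61.0]
    print(f"added latency under load: {latency_increase_ms(idle, loaded):.1f} ms")
    print("fine for VoIP" if ok_for_voip(loaded) else "too slow for VoIP")
```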

Here are the flent results - I didn’t block other traffic when generating this, but note how the maximum latency is around 60ms:

There is one important limitation in fq_codel’s effectiveness: while it can handle most scenarios, there can still be significant latency with hundreds or thousands of concurrent connections over a typical home connection.

If you see latency spikes in day-to-day use, check to make sure a system on your network isn’t abusing its connection. A successor to CoDel, Cake, might handle this better, but in my basic tests using LEDE it didn’t.

It’s clear that CoDel is a dramatic improvement over manual QoS or no QoS at all. I look forward to the next generation of AQM algorithms.